The proliferation of sophisticated AI agents across industries promised a new era of automated efficiency, yet a silent, significant cost has emerged that threatens to stall this progress. While Large Language Models (LLMs) possess unprecedented reasoning capabilities, their true power is only unlocked when they can interact with real-world data and services through Application Programming Interfaces (APIs). The challenge, however, lies in the laborious, expensive, and unscalable process of building these connections. Addressing this critical issue, travel technology company Agoda has released APIAgent, an open-source tool designed to eliminate the coding overhead and transform how AI agents integrate with enterprise systems.

The Hidden Costs of AI Integration

The promise of seamlessly connected AI agents often conceals a steep “integration tax”: the development and maintenance cost of bridging LLMs and enterprise data. This tax is not a one-time fee but a recurring expense. Every time an underlying API changes, the custom integration server built for it must be manually revised, tested, and redeployed. This constant upkeep consumes engineering time that could otherwise go to new work, creating a significant drag on productivity.

The problem becomes far more severe at enterprise scale. In organizations built on a microservices architecture, which can involve hundreds or even thousands of distinct APIs, the conventional approach is simply untenable: building and maintaining a separate integration server for every service is impractical and financially unsustainable. The result is a major bottleneck that limits the data AI agents can access and prevents companies from fully leveraging their AI investments.

Overcoming the Custom Code Bottleneck

The current industry standard for connecting an LLM to an external tool is a painstaking manual process. Developers write bespoke integration code, often in Python or TypeScript, that translates between the LLM's tool calls and the API's requests and responses. This code must then be deployed on a dedicated server and maintained for as long as the API is in use. The approach is inefficient and adds friction to the development lifecycle, slowing the rollout of new AI-powered features.

To standardize this communication, the industry has adopted protocols such as the Model Context Protocol (MCP). MCP gives LLMs and tools a common language for interacting, but it does not by itself solve the integration problem: each API still needs its own custom-coded MCP server. This is the issue APIAgent addresses. Acting as a universal proxy, it removes that requirement, allowing a single, configuration-driven deployment to serve many backend APIs without developers writing any integration logic.

A Look Under the Hood at APIAgent

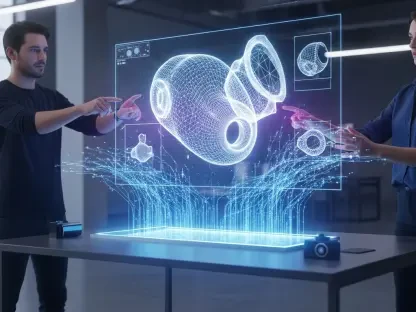

At its core, APIAgent functions as a universal proxy bridge, a centralized and reusable gateway that sits between an LLM and an organization’s backend services. A single instance of APIAgent can be deployed to serve an entire ecosystem of REST and GraphQL APIs, effectively acting as a standardized communication hub. This architecture dramatically simplifies the infrastructure required for AI agent integration, moving away from a fragmented, one-off approach toward a unified and scalable solution.
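The gateway idea can be illustrated with a minimal, self-contained sketch. It is not APIAgent's code: `ProxyGateway`, `register_backend`, the name-prefixing scheme, and the lambda handlers standing in for real HTTP calls are all hypothetical. It shows only the core shape, one process holding a namespaced registry of tools from several backends and dispatching each call by name.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

# A backend handler takes tool arguments and returns a JSON-like result.
# Real handlers would issue HTTP requests; lambdas stand in for them here.
Handler = Callable[[Dict[str, Any]], Any]


@dataclass
class ProxyGateway:
    """One gateway instance fronting many backend APIs (hypothetical sketch)."""

    tools: Dict[str, Handler] = field(default_factory=dict)

    def register_backend(self, prefix: str, operations: Dict[str, Handler]) -> None:
        # Prefix each operation with its backend name so tool names never collide.
        for op_name, handler in operations.items():
            self.tools[f"{prefix}_{op_name}"] = handler

    def list_tools(self) -> List[str]:
        return sorted(self.tools)

    def call(self, tool_name: str, arguments: Dict[str, Any]) -> Any:
        if tool_name not in self.tools:
            raise KeyError(f"unknown tool: {tool_name}")
        return self.tools[tool_name](arguments)


gateway = ProxyGateway()
gateway.register_backend("hotels", {"get_hotel": lambda a: {"id": a["id"], "name": "Demo Hotel"}})
gateway.register_backend("bookings", {"list_bookings": lambda a: []})
print(gateway.list_tools())  # ['bookings_list_bookings', 'hotels_get_hotel']
print(gateway.call("hotels_get_hotel", {"id": 7}))
```

Prefixing tool names by backend is one simple way to keep operations from different services from colliding when a single instance fronts many APIs.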

The tool’s “zero-code” promise rests on a feature called dynamic tool discovery. Instead of requiring manual mapping, APIAgent reads an API’s existing documentation: either an OpenAPI specification or a GraphQL introspection schema. From this documentation it determines the API’s capabilities, including the available endpoints, parameters, and data structures, and then generates LLM-compatible tools on the fly, making the API’s operations available to the AI agent (subject to the access controls described later) without hand-written glue code.
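The discovery step can be sketched for the OpenAPI case. The function below walks the `paths` object of an OpenAPI 3 document and emits one tool per operation in the MCP tool shape (`name`, `description`, and an `inputSchema` written as JSON Schema). This is an illustration under simplifying assumptions, not APIAgent's implementation: `tools_from_openapi` and the extra `_method`/`_path` routing keys are hypothetical, the sample spec is invented, and `$ref` resolution, path-level parameters, and request bodies are ignored.

```python
import re
from typing import Any, Dict, List

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def tools_from_openapi(spec: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Derive MCP-style tool definitions from an OpenAPI 3 document (sketch)."""
    tools = []
    for path, path_item in spec.get("paths", {}).items():
        for method, operation in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" or "summary"
            properties: Dict[str, Any] = {}
            required: List[str] = []
            for param in operation.get("parameters", []):
                properties[param["name"]] = param.get("schema", {"type": "string"})
                if param.get("required", False):
                    required.append(param["name"])
            # Prefer operationId; otherwise derive a name like "get_hotels_hotelId".
            name = operation.get("operationId") or re.sub(
                r"[^A-Za-z0-9]+", "_", f"{method}_{path}"
            ).strip("_")
            tools.append({
                "name": name,
                "description": operation.get("summary", f"{method.upper()} {path}"),
                "inputSchema": {"type": "object", "properties": properties, "required": required},
                # Hypothetical routing metadata (not part of MCP) for building the HTTP call.
                "_method": method.upper(),
                "_path": path,
            })
    return tools


# Invented sample spec: one operation with an operationId, one without.
spec = {
    "openapi": "3.0.3",
    "paths": {
        "/hotels/{hotelId}": {
            "get": {
                "operationId": "getHotel",
                "summary": "Fetch one hotel",
                "parameters": [
                    {"name": "hotelId", "in": "path", "required": True,
                     "schema": {"type": "integer"}},
                ],
            }
        },
        "/bookings": {"post": {"summary": "Create a booking"}},
    },
}

for tool in tools_from_openapi(spec):
    print(tool["name"], tool["_method"], tool["inputSchema"]["required"])
```

Because the tool list is computed from the spec each time, a changed API only requires a refreshed spec rather than edited integration code, which is the maintenance saving the article describes.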

APIAgent’s “secret sauce” is its use of DuckDB, an in-process SQL engine, for on-the-fly data processing. APIs frequently return large, raw, or unsorted payloads that are inefficient and costly to pass directly to an LLM. APIAgent intercepts these responses and uses DuckDB to filter, sort, and aggregate the data locally, so that only a concise, relevant, structured result reaches the LLM. Because the model processes far fewer tokens, this improves performance and reduces operational costs.

To further optimize performance, APIAgent employs a “Recipe Learning” mechanism that caches complex execution plans. When it encounters a recurring type of query, it can skip redundant LLM reasoning and execute the saved “recipe” directly, substantially reducing latency.
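Both ideas can be sketched together with Python's standard library. The sketch substitutes `sqlite3` for DuckDB purely to stay dependency-free; the principle of loading a raw payload into an in-process table and passing only the SQL result onward is the same. `reduce_payload`, `RecipeCache`, keying recipes by a query-type string, and the lambda that stands in for LLM planning are hypothetical; the source does not say how APIAgent identifies recurring queries or stores recipes.

```python
import sqlite3
from typing import Any, Callable, Dict, List


def reduce_payload(rows: List[Dict[str, Any]], sql: str) -> List[tuple]:
    """Load a raw JSON-like payload into an in-memory table and run SQL on it.

    sqlite3 stands in for DuckDB here. Assumes every row has the same keys and
    that the keys are plain identifiers (they are interpolated into the DDL).
    """
    if not rows:
        return []
    columns = list(rows[0].keys())
    conn = sqlite3.connect(":memory:")
    try:
        quoted = ", ".join(f'"{c}"' for c in columns)
        conn.execute(f"CREATE TABLE payload ({quoted})")
        placeholders = ", ".join("?" for _ in columns)
        conn.executemany(
            f"INSERT INTO payload VALUES ({placeholders})",
            [tuple(row[c] for c in columns) for row in rows],
        )
        return conn.execute(sql).fetchall()
    finally:
        conn.close()


class RecipeCache:
    """Hypothetical recipe store: reuse a planned SQL query per query type."""

    def __init__(self, plan_with_llm: Callable[[str], str]) -> None:
        self._plan_with_llm = plan_with_llm  # the expensive step to avoid repeating
        self._recipes: Dict[str, str] = {}
        self.llm_calls = 0

    def sql_for(self, query_type: str) -> str:
        if query_type not in self._recipes:
            self.llm_calls += 1
            self._recipes[query_type] = self._plan_with_llm(query_type)
        return self._recipes[query_type]


# Invented raw payload, as an API might return it: unsorted, one row per booking.
bookings = [
    {"city": "Tokyo", "nights": 2},
    {"city": "Bangkok", "nights": 3},
    {"city": "Bangkok", "nights": 5},
]
# A fixed SQL string stands in for the plan an LLM would produce.
cache = RecipeCache(lambda _query_type: (
    "SELECT city, SUM(nights) AS total FROM payload GROUP BY city ORDER BY city"
))
for _ in range(2):
    result = reduce_payload(bookings, cache.sql_for("nights_per_city"))
print(result)  # [('Bangkok', 8), ('Tokyo', 2)]
print(cache.llm_calls)  # 1
```

Only the two aggregated rows would be forwarded to the model instead of every booking, and on the second iteration the cached SQL is reused, so the planning function runs only once.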

A Security-First Design Philosophy

Built for deployment in complex corporate environments, APIAgent was engineered with a security-first mindset. By default, the tool operates in a strictly read-only mode, permitting only safe data retrieval operations like GET requests. This “safe by default” posture is a critical safeguard, ensuring that an AI agent cannot accidentally modify, create, or delete sensitive data without explicit permission. This default behavior provides a strong security foundation for developers and security teams alike.

Potentially mutating operations, such as POST, PUT, or DELETE requests, are controlled through an explicit whitelisting process. Administrators must list the specific mutating endpoints the AI agent may call in a YAML configuration file, which keeps these permissions clear and auditable. Because write access is strictly opt-in, every action capable of altering data is a deliberate, documented decision. This design lets organizations deploy APIAgent with confidence that guardrails are in place to protect their critical systems and data integrity.
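The read-only default and the opt-in allowlist combine into a small policy check. In this sketch, `AccessPolicy`, the set of methods treated as read-only, and the config keys (`allowed_mutations`, `method`, `path`) are hypothetical; they mirror the behavior described above, not APIAgent's actual YAML schema. The dictionary passed to `from_config` stands in for a parsed YAML file.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, FrozenSet, Tuple

# Assumption: methods treated as read-only (RFC 9110 "safe" methods, minus TRACE).
SAFE_METHODS = frozenset({"GET", "HEAD", "OPTIONS"})


@dataclass(frozen=True)
class AccessPolicy:
    """Read-only by default; mutating calls need an explicit allowlist entry."""

    # Each entry is (HTTP method, OpenAPI path template), e.g. ("POST", "/bookings").
    allowed_mutations: FrozenSet[Tuple[str, str]] = field(default_factory=frozenset)

    @classmethod
    def from_config(cls, config: Dict[str, Any]) -> "AccessPolicy":
        # Hypothetical keys; `config` stands in for a parsed YAML file.
        entries = config.get("allowed_mutations", [])
        return cls(frozenset((e["method"].upper(), e["path"]) for e in entries))

    def permits(self, method: str, path_template: str) -> bool:
        method = method.upper()
        if method in SAFE_METHODS:
            return True  # retrieval is always allowed
        # Anything else is denied unless explicitly whitelisted.
        return (method, path_template) in self.allowed_mutations


policy = AccessPolicy.from_config(
    {"allowed_mutations": [{"method": "post", "path": "/bookings"}]}
)
print(policy.permits("GET", "/hotels/{hotelId}"))              # True
print(policy.permits("POST", "/bookings"))                     # True
print(policy.permits("DELETE", "/bookings/{bookingId}"))       # False
print(AccessPolicy().permits("PUT", "/bookings/{bookingId}"))  # False
```

Matching on the path template rather than a concrete URL means one allowlist entry covers every request to that operation, while any method and path pair not listed stays blocked.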

From Theory to Practice: A Three-Step Workflow

Implementing APIAgent reduces the intricate process of LLM integration to a straightforward, three-step workflow. The first step is to deploy a single, reusable instance of the APIAgent proxy. This one-time setup creates the central gateway that serves as the integration hub for any number of APIs, removing the need to provision new infrastructure for each additional service.

Next, APIAgent is configured by pointing it at each API’s existing documentation: the URL of an OpenAPI specification for a REST API, or a GraphQL schema for a GraphQL service, supplied in the configuration file. This is where the zero-code principle comes to life, as APIAgent handles the rest of the discovery process automatically, without further developer intervention.

The final step is to connect the AI agent to the configured APIAgent endpoint. Once connected, the LLM immediately gains access to the tools derived from the underlying APIs, within the read-only default and any write permissions granted in the configuration. This streamlined process can turn a task that previously took days or weeks of custom coding into a matter of minutes, letting developers quickly connect their AI agents to the data and services they need. The shift marks a significant step toward making enterprise-grade AI agents more accessible and scalable.
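On the wire, MCP messages are JSON-RPC 2.0. Once connected, an MCP client typically discovers tools with a `tools/list` request and invokes one with `tools/call`, passing the tool `name` and its `arguments`. The sketch below only builds those two messages; the tool name `getHotel` and its argument are hypothetical, and the transport (stdio or HTTP) and the `initialize` handshake that precedes these calls are omitted.

```python
import itertools
import json
from typing import Any, Dict, Optional

# Incrementing ids keep each request id unique within a session.
_request_ids = itertools.count(1)


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Discover the tools the proxy exposes...
list_tools = make_request("tools/list")
# ...then invoke one of them (hypothetical tool name and argument).
call_tool = make_request("tools/call", {"name": "getHotel", "arguments": {"hotelId": 42}})

print(json.dumps(list_tools))
print(json.dumps(call_tool))
```

From the agent's point of view, these two requests look the same whether the proxy fronts one API or hundreds; only the contents of the `tools/list` response grow.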