The era of measuring artificial intelligence by the sheer size of its models is drawing to a close, giving way to a more nuanced and impactful phase of development. For the past several years, the industry has been locked in an arms race in which progress was defined primarily by growing parameter counts and computational power. The coming year is poised to mark a significant inflection point: the pursuit of scale is giving way to a focus on the core intelligence, capability, and practical utility of AI systems. This transition signals a crucial maturation of the field, from raw processing power toward reliability, autonomy, and deep integration. The most consequential advancements of 2026 will be measured not in petabytes of data or trillions of parameters, but in AI’s ability to execute complex, multi-step workflows, learn from experience, and collaborate seamlessly, heralding an era in which “smarter” definitively supersedes “bigger.” This evolution is not a slowdown but a strategic pivot, driven by emerging technical limits, such as a shrinking supply of high-quality training data, and by growing market demand for enterprise-grade solutions that deliver tangible, consistent value.

The New Pillars of AI Innovation

Democratizing Power and Access

The exclusive hold that a handful of technology behemoths have long had on powerful foundation models is set to be fundamentally disrupted. A critical shift is occurring in the AI development lifecycle: the locus of innovation is moving from the prohibitively expensive pre-training phase to the more accessible post-training phase. It is in this later stage, where models are refined, specialized, and fine-tuned with curated, task-specific data, that the most significant gains in performance and utility are now being realized. This change radically alters the competitive landscape and creates fertile ground for a new wave of high-performance open-source models. Built on a shared, open foundation, these models can be freely accessed, customized, and enhanced by a global community of developers, researchers, and agile startups. By leveraging this collaborative ecosystem, smaller entities can build highly tailored, potent AI solutions without the astronomical cost of training a colossal model from scratch, loosening the established industry oligopoly and accelerating innovation.
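To make the pre-training versus post-training distinction concrete, here is a deliberately tiny sketch in pure Python. It assumes a "model" that is nothing more than a vector of logits over four candidate answers; the pretrained values and the curated examples are invented for illustration. Post-training here means starting from those existing logits and running a few cross-entropy gradient steps on task-specific targets, rather than learning everything from scratch. Real post-training updates billions of weights, but the principle of nudging an existing model toward curated data is the same.

```python
import math


def softmax(logits):
    # Subtract the max logit for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fine_tune(logits, curated_targets, lr=0.5, epochs=20):
    """Post-train existing logits on curated target indices with cross-entropy SGD.

    The gradient of cross-entropy with respect to the logits is (p - onehot(target)),
    so every step shifts probability mass toward the curated examples.
    """
    logits = list(logits)  # start from the "pretrained" values, not from zero
    for _ in range(epochs):
        for target in curated_targets:
            probs = softmax(logits)
            for i in range(len(logits)):
                grad = probs[i] - (1.0 if i == target else 0.0)
                logits[i] -= lr * grad
    return logits


# Hypothetical "pretrained" preferences over four candidate answers.
pretrained = [2.0, 1.0, 0.5, 0.1]
# Hypothetical curated, task-specific data: the domain's preferred answer is index 2.
curated = [2, 2, 2]

before = softmax(pretrained)[2]
after = softmax(fine_tune(pretrained, curated))[2]
print(f"P(answer 2) before: {before:.3f}, after: {after:.3f}")
```

The pretrained model initially ranks answer 2 third; a small amount of curated data is enough to make it dominant, which is the economic point of the paragraph above: the expensive starting point is reused, and only the cheap refinement step is repeated per task.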

This democratization of advanced AI capabilities is projected to unleash a more distributed and faster wave of progress across the entire industry. Widely available, powerful open-source foundation models remove the primary barrier to entry, allowing innovation to thrive far beyond the walls of large corporate research labs. Startups and academic institutions can now focus their resources on specialized applications and niche problems with a level of sophistication that was previously out of reach. The result is a vibrant ecosystem in which custom-tailored AI solutions can be developed for specific industries, from healthcare to finance, with greater efficiency and precision. Beyond more competition, this promises a richer, more diverse AI landscape in which the collective intelligence of a global community drives the technology forward, making breakthroughs more frequent and their benefits more broadly shared, and moving the industry toward a more equitable and dynamic future.

Building Autonomous and Reliable Agents

As incremental improvements in the core reasoning abilities of large language models begin to show diminishing returns, the industry’s focus is pivoting to agentic AI as the next critical frontier. The vision for 2026 is the emergence of intelligent, integrated AI systems, or “agents,” capable of operating with far greater autonomy. The key enablers of this leap are not simply more processing power but advances in two specific areas: context windows and memory. Larger context windows let a model process more information at once, but anything outside the current window, including earlier sessions, is lost unless it is supplied again; a fundamental limitation of current AI is its lack of persistent working memory. This deficiency severely restricts its effectiveness on complex tasks that unfold over time. By engineering more sophisticated, human-like memory systems, AI agents will gain the ability to retain information from past interactions, learn from their actions, and maintain a consistent understanding of long-term objectives, finally moving beyond single, transactional exchanges to become continuous, collaborative partners.
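The difference between a context window and persistent memory can be sketched in a few lines. The example below is a minimal illustration, not a description of any particular agent framework: `AgentMemory`, `remember`, and `recall` are hypothetical names, notes are persisted to a JSON file so a later session can reload them, and retrieval uses naive keyword overlap as a stand-in for the embedding-based similarity search that many real memory systems use.

```python
import json
import os
import tempfile
from pathlib import Path


class AgentMemory:
    """Hypothetical persistent memory: notes survive across sessions via a JSON file.

    Retrieval is naive keyword overlap, a simplification of similarity search.
    """

    def __init__(self, path):
        self.path = Path(path)
        # Reload whatever earlier sessions stored, so the agent does not start cold.
        self.notes = json.loads(self.path.read_text()) if self.path.exists() else []

    def remember(self, text):
        self.notes.append(text)
        self.path.write_text(json.dumps(self.notes))  # persist immediately

    def recall(self, query, k=3):
        # Score each stored note by how many words it shares with the query.
        words = set(query.lower().split())
        scored = [(len(words & set(n.lower().split())), n) for n in self.notes]
        scored = [(s, n) for s, n in scored if s > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [n for _, n in scored[:k]]


path = os.path.join(tempfile.mkdtemp(), "memory.json")

session1 = AgentMemory(path)
session1.remember("user prefers quarterly reports in pdf")
session1.remember("project deadline is march 3")

session2 = AgentMemory(path)  # a later session reloads the same file
print(session2.recall("what format for quarterly reports"))
```

The second `AgentMemory` instance has an empty context of its own yet still answers from what the first session stored, which is the property a context window alone does not provide.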

One of the most significant obstacles preventing the widespread adoption of AI agents in mission-critical enterprise environments has been the persistent problem of error accumulation. In any multi-step workflow, a small inaccuracy in an early stage can cascade and magnify, often leading to a completely incorrect final outcome and necessitating constant human oversight that negates the benefits of automation. The prediction for 2026 is that this challenge will be directly addressed through the development of robust self-verification capabilities. Future AI systems will be engineered with internal feedback loops, allowing them to autonomously assess and validate the accuracy of their own outputs at each stage of a process. These “auto-judging” agents will be able to identify their own mistakes, initiate corrective actions, and iterate on a solution without human intervention. This breakthrough will be transformative, enabling the scalable deployment of AI for complex, multi-hop workflows that are not only powerful but also highly reliable, moving agentic AI from a promising but fragile concept to a viable, trusted solution for enterprise automation.
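The error-accumulation argument is easy to quantify. If each step of an n-step workflow succeeds independently with probability p, the whole chain succeeds with probability p^n, so ten steps at 95% accuracy complete correctly only about 60% of the time. The sketch below computes that and contrasts it with a generate, verify, retry loop. It rests on two loud assumptions: attempts are independent, and the verifier is perfect (it never accepts a wrong output or rejects a right one). Real self-verification is imperfect, so the "with check" figure is an upper bound. `run_with_self_check` is a hypothetical helper, not an existing API.

```python
def chain_success(p_step, n_steps):
    """Probability that all n independent steps succeed when each succeeds with p_step."""
    return p_step ** n_steps


def step_success_with_retries(p_step, max_attempts):
    """Per-step success if a verifier catches every error and the step is retried.

    Assumes attempts are independent and the verifier never misjudges an output.
    """
    return 1 - (1 - p_step) ** max_attempts


def run_with_self_check(generate, verify, max_attempts=3):
    """Generate, verify, and retry; return (output, attempts_used) or raise."""
    for attempt in range(1, max_attempts + 1):
        candidate = generate()
        if verify(candidate):
            return candidate, attempt
    raise RuntimeError(f"no verified output after {max_attempts} attempts")


# Ten steps at 95% per-step accuracy: end-to-end success is only about 60%.
no_check = chain_success(0.95, 10)
# Same chain, up to three verified attempts per step: roughly 99.9%.
with_check = chain_success(step_success_with_retries(0.95, 3), 10)

# Toy demo: a stubbed "generator" whose first draft fails the check.
drafts = iter([7, 12, 9])
result, attempts = run_with_self_check(lambda: next(drafts), lambda x: x % 2 == 0)
print(round(no_check, 3), round(with_check, 4), result, attempts)
```

The gap between the two numbers is why self-verification matters more than marginal per-step accuracy: retries gated by a check attack the exponent, not just the base.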

Redefining Development and Deployment

Transforming Human-Computer Interaction

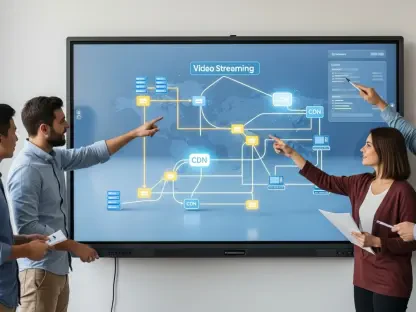

An artificial intelligence’s ability to understand, generate, and execute code represents its most important proving ground for advanced reasoning, forming a vital bridge between the probabilistic nature of language models and the deterministic, symbolic logic that governs computers. This connection is now poised to unlock a new paradigm in software development: English-language programming. In this emerging era, the essential skill for creating software will shift away from mastering the often arcane syntax of traditional programming languages like Python or Go. Instead, the primary skill will be the ability to articulate a desired outcome to an AI assistant clearly, precisely, and logically in natural language. This change promises to democratize software development on an unprecedented scale. By 2026, the main bottleneck in product development will no longer be a scarcity of skilled coders but the creative capacity to envision and articulate the product itself, empowering a new generation of creators to build applications.

This fundamental transformation will have far-reaching implications, potentially increasing the number of people who can build and deploy software by an order of magnitude. Individuals with deep domain expertise in fields like finance, medicine, or law, but without formal programming training, will be able to translate their ideas directly into functional applications. This dramatically lowers the barrier to entry for innovation, allowing solutions to be developed by those who are closest to the problems they are trying to solve. For existing developers, this shift liberates them from the more tedious aspects of coding, allowing them to focus on higher-value activities such as system architecture, user experience design, and complex problem-solving. Rather than replacing human developers, this new paradigm will augment their capabilities, accelerating development cycles and enabling the creation of more sophisticated and ambitious software projects than ever before, fostering a more creative and productive technological landscape.

Rethinking the Foundation of AI

The arms race to build the largest possible foundation model by simply adding more data and compute is approaching its end. In 2025, the industry began to run into the practical limits of established scaling laws, such as the Chinchilla formula, which have guided model development for years. Because these laws call for training data to grow in step with model size, several constraints have emerged as significant roadblocks, including a dwindling supply of high-quality, publicly available pre-training data and training token horizons that have grown unmanageably long and expensive for the largest models. The cost and logistical complexity of sourcing both data and compute are forcing a strategic reevaluation across the field. Consequently, the pursuit of sheer size will decelerate in 2026 as innovation and resources are redirected toward more efficient, targeted methods of improving AI performance. This marks a necessary pivot from brute force to intelligent refinement and optimization.
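The data squeeze follows directly from the arithmetic of the Chinchilla result, which is commonly summarized as a rule of thumb: a compute-optimal model of N parameters should be trained on roughly D ≈ 20N tokens, and training compute is often approximated as C ≈ 6ND FLOPs. Both are approximations rather than exact laws, and the helper names below are illustrative. For reference, the Chinchilla model itself had 70 billion parameters and was trained on about 1.4 trillion tokens.

```python
TOKENS_PER_PARAM = 20  # Chinchilla rule of thumb (approximate)


def chinchilla_tokens(n_params):
    """Approximate compute-optimal number of training tokens for a given model size."""
    return TOKENS_PER_PARAM * n_params


def training_flops(n_params, n_tokens):
    """Common approximation: about 6 FLOPs per parameter per training token."""
    return 6 * n_params * n_tokens


for n_params in (70e9, 1e12):
    tokens = chinchilla_tokens(n_params)
    flops = training_flops(n_params, tokens)
    print(f"{n_params:.0e} params -> {tokens:.1e} tokens, {flops:.1e} FLOPs")
```

Scaling from 70 billion to 1 trillion parameters multiplies the token requirement by about 14 (to roughly 20 trillion tokens) and compute by about 200, because compute grows with the square of model size when data scales alongside it. That quadratic growth is what makes both the data supply and the token horizon bind.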

As the focus shifts away from pre-training, companies will dedicate an increasing share of their compute budgets to sophisticated post-training techniques. Methods like reinforcement learning will be used to make existing models dramatically more capable and efficient for specific, high-value tasks. The competitive differentiator will no longer be who possesses the biggest model, but who can most effectively make their model smarter and more useful for real-world applications.

A parallel frontier is the challenge of agent interoperability. Currently, most AI agents operate within closed, proprietary ecosystems, or “walled gardens,” unable to communicate or collaborate with agents from other platforms. Breaking down these silos through the development and adoption of open standards will give rise to an “agent economy,” analogous to how the API economy revolutionized the software industry. In this new economy, AI agents will autonomously discover, negotiate, and exchange services, enabling the automation of complex cross-platform workflows and ushering in the next significant wave of AI-driven productivity.
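The discover, negotiate, and exchange cycle of an agent economy can be illustrated with an in-process toy. Everything here is hypothetical: `ServiceOffer`, `Registry`, and `negotiate` stand in for whatever open standard eventually emerges, "negotiation" is reduced to picking the cheapest offer within budget, and a real protocol would also need a wire format, authentication, and payment. The point is only that agents from different vendors become interchangeable once they advertise against a shared capability vocabulary.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ServiceOffer:
    agent: str                      # which agent provides the service
    capability: str                 # term from a shared vocabulary, e.g. "summarize"
    price: float                    # quoted cost per call, in arbitrary units
    handler: Callable[[str], str]   # performs the service


@dataclass
class Registry:
    """Hypothetical shared directory where agents from different platforms advertise."""
    offers: Dict[str, List[ServiceOffer]] = field(default_factory=dict)

    def advertise(self, offer):
        self.offers.setdefault(offer.capability, []).append(offer)

    def discover(self, capability):
        return self.offers.get(capability, [])


def negotiate(offers, budget):
    """Trivial negotiation: choose the cheapest offer within budget, or None."""
    affordable = [o for o in offers if o.price <= budget]
    return min(affordable, key=lambda o: o.price) if affordable else None


registry = Registry()
registry.advertise(ServiceOffer("vendor_a_agent", "summarize", 0.8, lambda t: t[:20]))
registry.advertise(ServiceOffer("vendor_b_agent", "summarize", 0.5, lambda t: t.split(".")[0]))

chosen = negotiate(registry.discover("summarize"), budget=1.0)
print(chosen.agent, "->", chosen.handler("Q3 revenue rose. Costs were flat."))
```

The requesting agent never names a vendor; it asks for a capability and lets the registry and the negotiation rule pick a provider, which mirrors how clients of the API economy bind to interfaces rather than implementations.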

A Maturation Toward Sophistication

The trajectory of artificial intelligence is clearly pivoting. The industry is moving decisively beyond the monolithic pursuit of scale, recognizing that true intelligence is a function not of size but of sophistication, reliability, and integration. Advances in self-verification, persistent memory, and interoperability are laying the groundwork for an entirely new ecosystem. In this evolved landscape, AI will no longer be just a powerful but unpredictable tool; it will become a reliable, autonomous, and integrated partner in innovation and enterprise. This maturation represents the crucial step from promising technology to indispensable infrastructure, setting the stage for a future defined by collaborative, intelligent systems.