Unleashing AI That Acts on Its Own—But How Safely?

Picture a world where an AI doesn’t just answer queries but makes decisions, executes tasks, and adapts to challenges without constant human input. This isn’t a distant dream: agentic AI platforms, systems that reason and act autonomously, are already here. Yet as these agents gain power, a critical question looms: how can such autonomy be harnessed without risking chaos or harm? The stakes are high, with industries from healthcare to finance betting on AI to transform workflows. The lessons from Gravity, a pioneering agentic AI platform, offer a blueprint for balancing innovation with security in a rapidly evolving tech landscape.

Why Agentic AI Matters Now More Than Ever

The emergence of agentic AI, powered by advanced large language models (LLMs), marks a pivotal shift from passive tools to dynamic systems capable of continuous action. These platforms are no longer mere chatbots; they’re becoming indispensable as autonomous assistants and decision-making copilots, automating intricate processes across sectors. Statistics underscore this trend, with a recent study showing a 68% increase in enterprise adoption of AI-driven automation since 2025. However, the promise of efficiency comes with significant risks: unchecked autonomy could lead to costly errors or ethical dilemmas. As businesses rush to integrate these systems, they must address scalability and safety together.

Decoding the Core of a Robust Agentic AI System

Building a production-grade agentic AI platform requires a comprehensive strategy that prioritizes reliability and control. Gravity’s implementation offers a clear framework, focusing on essential elements that ensure systems can handle real-world demands. This approach isn’t just about coding smarter AI but about crafting an ecosystem where autonomy thrives within defined boundaries.

The foundation lies in modular orchestration, a design that rejects rigid pipelines for a flexible, event-driven architecture. Gravity models agents as independent services responding to specific triggers, using tools like Temporal to manage workflows. This setup allows for seamless updates or audits of individual components without halting the entire system, ensuring adaptability as tasks multiply.
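
To make the event-driven idea concrete, here is a minimal sketch in Python. It does not use Temporal or reflect Gravity’s actual code; a plain in-process queue stands in for a durable workflow engine, and the names `EventBus`, `research_agent`, `summary_agent`, and the topic strings are hypothetical. Each agent only knows which event it reacts to and which events it emits, so one agent can be swapped out without touching the others.

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List


@dataclass
class Event:
    topic: str
    payload: dict = field(default_factory=dict)


# A handler receives one event and returns zero or more follow-up events.
Handler = Callable[[Event], List[Event]]


class EventBus:
    """In-process stand-in for a workflow engine (hypothetical, not Temporal)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)

    def run(self, first: Event, max_events: int = 100) -> List[str]:
        """Process events until the queue drains; return topics in handling order."""
        queue: Deque[Event] = deque([first])
        handled: List[str] = []
        # max_events is a hard stop so a misbehaving agent cannot loop forever.
        while queue and len(handled) < max_events:
            event = queue.popleft()
            handled.append(event.topic)
            for handler in self._handlers.get(event.topic, []):
                queue.extend(handler(event))
        return handled


def research_agent(event: Event) -> List[Event]:
    # Placeholder for an LLM-backed step.
    notes = f"notes on {event.payload['question']}"
    return [Event("research.done", {"notes": notes})]


def summary_agent(event: Event) -> List[Event]:
    # Placeholder for a second, independent agent.
    return [Event("summary.done", {"summary": event.payload["notes"].upper()})]


bus = EventBus()
bus.subscribe("question.received", research_agent)
bus.subscribe("research.done", summary_agent)
topics = bus.run(Event("question.received", {"question": "churn"}))
```

Replacing `summary_agent` with a different handler requires only a new `subscribe` call, which is the property the modular design is meant to preserve at production scale.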

Safety remains non-negotiable, achieved through behavioral guardrails embedded at every level. Gravity implements hard limits on actions, such as capping API calls per agent, alongside human approval checkpoints for critical decisions. Fallback mechanisms further mitigate risks, providing a safety net against unintended outcomes. Testing these constraints through simulations helps refine policies, maintaining a proactive stance on responsible deployment.
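
The three guardrails named above (a hard cap on calls, a human approval checkpoint, and a fallback) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Gravity’s implementation: the `Guardrail` class, the `approver` callback, and the action names are all hypothetical, and a real approval step would wait on a person rather than a lambda.

```python
from dataclasses import dataclass
from typing import Callable, Optional


class BudgetExceeded(Exception):
    """Raised when an agent has used up its call budget."""


@dataclass
class Guardrail:
    max_calls: int                      # hard limit on executed actions
    approver: Callable[[str], bool]     # stand-in for a human approval step
    calls_made: int = 0

    def execute(
        self,
        action: str,
        run: Callable[[], str],
        critical: bool = False,
        fallback: Optional[Callable[[], str]] = None,
    ) -> str:
        # 1. Hard limit: refuse outright once the budget is spent.
        if self.calls_made >= self.max_calls:
            raise BudgetExceeded(f"{action}: limit of {self.max_calls} calls reached")
        # 2. Approval checkpoint: critical actions need an explicit yes.
        if critical and not self.approver(action):
            return f"rejected: {action}"
        self.calls_made += 1
        # 3. Fallback: if the action fails, use the safety net when one exists.
        try:
            return run()
        except Exception:
            if fallback is None:
                raise
            return fallback()


def flaky_rates() -> str:
    raise TimeoutError("upstream timeout")


guard = Guardrail(max_calls=2, approver=lambda action: action != "refund_customer")
r1 = guard.execute("lookup_order", lambda: "order found")
r2 = guard.execute("refund_customer", lambda: "refunded", critical=True)
r3 = guard.execute("fetch_rates", flaky_rates, fallback=lambda: "cached rates")
```

In this sketch a rejected action does not count against the budget; whether it should is a policy choice that simulations of the kind described above would help settle.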

Memory and Transparency: The Unsung Heroes of Autonomy

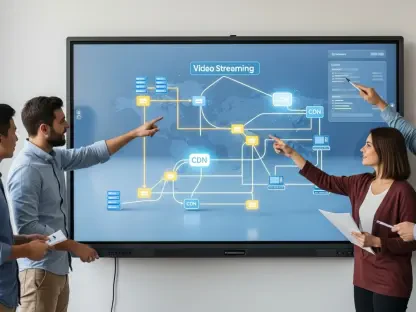

Beyond structure and safety, agentic AI demands mechanisms to sustain context and accountability. Gravity tackles this with a hybrid memory system, blending short-term storage for recent interactions, long-term embeddings for historical recall, and real-time buffers for active planning. Using technologies like Redis and Pinecone, this setup enables features such as failure reflection and personalized user engagement, ensuring decisions are informed by past experiences.
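
A rough sketch of the three memory tiers follows, using only the Python standard library. It is an assumption-laden stand-in: a bounded `deque` plays the role a store like Redis might play for recent interactions, a list scanned with cosine similarity plays the role of a vector database like Pinecone, and a plain dictionary acts as the planning buffer. The `HybridMemory` class and the hand-written two-dimensional embeddings are hypothetical; a real system would obtain embeddings from a model.

```python
import math
from collections import deque
from typing import Deque, Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class HybridMemory:
    def __init__(self, short_term_size: int = 3) -> None:
        # Short-term: only the most recent interactions, oldest dropped first.
        self.short_term: Deque[str] = deque(maxlen=short_term_size)
        # Long-term: (embedding, text) pairs searched by similarity.
        self.long_term: List[Tuple[List[float], str]] = []
        # Working buffer: scratch state for the plan currently in progress.
        self.scratchpad: Dict[str, str] = {}

    def remember(self, text: str, embedding: List[float]) -> None:
        self.short_term.append(text)
        self.long_term.append((embedding, text))

    def recall(self, query_embedding: List[float], k: int = 1) -> List[str]:
        """Return the k stored texts most similar to the query embedding."""
        ranked = sorted(
            self.long_term,
            key=lambda item: cosine(item[0], query_embedding),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


memory = HybridMemory(short_term_size=2)
memory.remember("payment API timed out", [1.0, 0.0])
memory.remember("user prefers weekly reports", [0.0, 1.0])
memory.remember("retry succeeded after backoff", [0.9, 0.1])
memory.scratchpad["current_goal"] = "reconcile failed payments"

recent = list(memory.short_term)
similar = memory.recall([1.0, 0.0], k=2)
```

Here `recent` holds only the last two interactions, while `similar` surfaces the earlier timeout even though it has already aged out of short-term storage. That is the kind of recall that failure reflection depends on.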

Transparency, equally vital, combats the opacity often associated with AI. Gravity employs structured logs and distributed tracing to map every reasoning step and action outcome. Human-in-the-loop oversight adds another layer of control, allowing for real-time monitoring and iterative improvements. This dual focus on memory and observability builds trust, making complex systems interpretable to developers and stakeholders alike.
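
As an illustration of structured logs tied together by tracing, the sketch below emits one JSON record per step, each carrying a shared trace ID and a pointer to its parent step. The `Tracer` class and its field names are hypothetical and deliberately simplified; a production system would more likely use an established tracing library than hand-rolled code like this.

```python
import json
import time
import uuid
from contextlib import contextmanager
from typing import Iterator, List


class Tracer:
    """Collects one JSON log line per span; all spans share a trace ID."""

    def __init__(self) -> None:
        self.trace_id = uuid.uuid4().hex
        self.records: List[str] = []
        self._stack: List[str] = []  # IDs of spans that are currently open

    @contextmanager
    def span(self, name: str, **attrs: object) -> Iterator[None]:
        span_id = uuid.uuid4().hex[:8]
        parent_id = self._stack[-1] if self._stack else None
        self._stack.append(span_id)
        start = time.perf_counter()
        status = "ok"
        try:
            yield
        except Exception:
            status = "error"
            raise
        finally:
            # The record is written when the span closes, even on failure.
            self._stack.pop()
            self.records.append(json.dumps({
                "trace_id": self.trace_id,
                "span_id": span_id,
                "parent_id": parent_id,
                "name": name,
                "status": status,
                "duration_ms": round((time.perf_counter() - start) * 1000, 3),
                **attrs,
            }))


tracer = Tracer()
with tracer.span("plan", agent="planner"):
    with tracer.span("tool_call", tool="search"):
        pass  # a real agent would call a tool here

entries = [json.loads(line) for line in tracer.records]
```

Because inner spans close first, the `tool_call` record is written before the `plan` record, and its `parent_id` links it back to the step that triggered it. A reviewer can rebuild the full chain of reasoning and action from these links.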

Expert Voices: Lessons from the Trenches

Insights from Gravity’s leadership reveal that success hinges on more than cutting-edge models. Lucas Thelosen, CEO, and Drew Gillson, CTO, stress that “the ecosystem surrounding the AI defines its true potential.” Their perspective highlights infrastructure as the backbone of autonomy, often outranking raw model performance in production environments. Early scalability challenges, for instance, were overcome by adopting event-driven modularity, which cut system downtime during updates by 40%.

These real-world experiences align with broader industry trends advocating for multi-layered safety protocols. Gravity’s guardrails reflect a growing consensus on accountability, as companies grapple with the ethical implications of autonomous systems. Such frontline lessons emphasize that deployment often uncovers hurdles no amount of theory can predict, underscoring the value of iterative learning in AI development.

Practical Steps to Craft Your Own AI Platform

For developers eager to build their own agentic AI systems, an actionable roadmap inspired by Gravity’s success offers clear guidance. Start by designing with modularity at the core, breaking down tasks into mini-agents coordinated through event-driven workflows. Tools like Temporal can streamline sequencing, ensuring components remain interchangeable without disrupting operations.

Safety must be layered, combining hard constraints on actions with human oversight for high-stakes scenarios. Simulating edge cases before deployment allows for policy refinement, while integrating observability through tracing and logs ensures every decision is trackable. Finally, anchor AI outputs with deterministic business rules, using traditional code to enforce logic and minimize errors like hallucinations. This structured approach balances autonomy with control, paving the way for scalable growth.
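
The last step, anchoring AI outputs with deterministic rules, can be as simple as refusing to act on anything ordinary code cannot validate. The Python sketch below assumes the model is asked to reply in JSON; the allowed actions, the `MAX_AUTO_REFUND` limit, and the `validate` function are hypothetical examples rather than rules taken from Gravity.

```python
import json
from dataclasses import dataclass
from typing import List, Optional, Tuple

ALLOWED_ACTIONS = {"refund", "escalate", "close"}  # hypothetical action set
MAX_AUTO_REFUND = 100.0                            # hypothetical business limit


@dataclass
class Decision:
    action: str
    amount: float


def validate(raw_output: str) -> Tuple[Optional[Decision], List[str]]:
    """Check model output against fixed rules; return (decision, errors)."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return None, ["output is not valid JSON"]
    if not isinstance(data, dict):
        return None, ["output is not a JSON object"]

    errors: List[str] = []
    action = data.get("action")
    amount = data.get("amount", 0)

    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        errors.append(f"unknown action: {action!r}")
    # bool is a subclass of int in Python, so it is excluded explicitly.
    if isinstance(amount, bool) or not isinstance(amount, (int, float)) or amount < 0:
        errors.append("amount must be a non-negative number")
    elif action == "refund" and amount > MAX_AUTO_REFUND:
        errors.append("refund exceeds automatic limit")

    if errors:
        return None, errors
    return Decision(action, float(amount)), []


ok, ok_errors = validate('{"action": "refund", "amount": 40}')
bad, bad_errors = validate('{"action": "delete_account", "amount": 40}')
big, big_errors = validate('{"action": "refund", "amount": 5000}')
garbled, garbled_errors = validate("Sure! I will refund them right away.")
```

Only the first call yields a `Decision`. The others return `None` with a list of reasons, which the surrounding system could route to a retry, a fallback, or a human reviewer. The model proposes; traditional code decides whether the proposal is allowed to run.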

Reflecting on a Journey of Innovation and Caution

Looking back, the path to building safe and scalable agentic AI platforms like Gravity reveals both the immense potential and the inherent challenges of autonomous systems. Each lesson, from modular design to rigorous safety measures, underscores that true intelligence emerges from thoughtful infrastructure rather than sheer computational power. The journey highlights a critical balance: pushing boundaries while safeguarding against risks.

For those venturing into this space, the next steps involve prioritizing adaptability and accountability in equal measure. Exploring hybrid models that pair AI creativity with structured rules offers a promising direction. As the field continues to evolve, staying attuned to emerging tools and ethical considerations becomes essential, ensuring that agentic AI not only transforms industries but does so with unwavering responsibility.