Today we’re joined by Anand Naidu, a development expert with deep proficiency in both frontend and backend systems. As AI-assisted tools rapidly reshape how software is created, Anand offers critical insights into the emerging practice of “vibe coding” and the often-overlooked security implications that come with its speed.

This conversation will explore the hidden risks behind AI-generated applications that seem to work perfectly right away. We’ll discuss how even non-developers can apply foundational security frameworks to their prototypes, identify essential first-step security checks before an app goes live, and understand when to bring in engineering expertise for more robust, scalable solutions. We will also delve into why tracking the origins of AI-generated code is crucial for long-term maintenance and security.

With one prediction holding that 40% of enterprise software will be AI-assisted by 2028, the “vibe coding” approach is clearly accelerating development. What is the biggest misconception teams have when an AI-generated app works on the first try, and what initial security mindset shift is required?

The biggest misconception is believing the work is over. That initial success, where the application does exactly what you wanted, feels like a huge win. The speed is intoxicating, and you feel like you’ve reached the finish line. But in reality, the race has only just begun. An application that functions isn’t the same as one that is robust, efficient, or secure. The AI may have built something that looks great but is incredibly fragile at its core. The essential mindset shift is moving from “it works” to “we understand how it could fail.” You have to accept that security isn’t an assumed feature; it’s a responsibility that begins the moment the first line of code is generated.

When a vibe-coded app seems ready, the impulse is to deploy it. Could you walk us through how a non-security professional can use a framework like STRIDE to identify fundamental risks, such as potential data leaks or denial of service vulnerabilities, before going live?

Absolutely. STRIDE is a fantastic mental checklist for anyone, regardless of their security background. Think of it as a guide for asking the uncomfortable questions before an attacker does. For “Spoofing,” you ask, “Can someone pretend to be another user in my app?” For “Information Disclosure,” you check, “Does the app leak sensitive data through error messages, logs, or APIs?” And for “Denial of Service,” you question, “What happens if someone spams requests to my app? Are there rate limits in place to prevent it from crashing?” Walking through each letter of STRIDE—Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service, and Elevation of Privilege—forces you to proactively look for weaknesses instead of just admiring the functional parts.
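To make the “Denial of Service” question concrete, here is a minimal sketch of a per-client rate limit using a token bucket. It is an illustration rather than anything prescribed in the interview: the class names, the `client_id` key, and the capacity and refill values are all assumptions, and a real deployment would more likely rely on a gateway or framework feature.

```python
import time
from typing import Dict, Optional


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at a steady rate."""

    def __init__(self, capacity: float, refill_per_second: float,
                 now: Optional[float] = None) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = capacity  # start with a full bucket
        self.updated = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        """Spend one token if available; return False when the caller should be throttled."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.updated)
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class RateLimiter:
    """Keeps one bucket per client (hypothetical key: user ID or IP address)."""

    def __init__(self, capacity: float, refill_per_second: float) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        if client_id not in self._buckets:
            self._buckets[client_id] = TokenBucket(
                self.capacity, self.refill_per_second, now
            )
        return self._buckets[client_id].allow(now)
```

A request handler would call `limiter.allow(client_id)` before doing any work and return an error such as HTTP 429 when it comes back `False`. The optional `now` argument exists only so the behavior can be checked without waiting on a real clock.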

Let’s talk about the first practical steps. After a successful prototype, what are some key security checks a team should perform? For instance, how would they validate that user identities are handled correctly and that no hardcoded credentials have been embedded in the code?

Once the prototype is working, the first thing to do is sanity-check the fundamentals. Before you even think about scaling, you must validate how the application handles identities. This goes back to the “Spoofing” risk in STRIDE; you need to ensure one user can’t easily impersonate another. The second, and critically important, check is for embedded credentials. It’s a classic mistake where a developer—or in this case, an AI—leaves passwords, API keys, or other secrets directly in the code. A quick scan of the codebase for anything that looks like a key or password is an essential, non-negotiable first step. These two checks alone can prevent some of the most common and devastating security breaches.
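The “quick scan” described above can be sketched in a few lines. The patterns below are illustrative assumptions, not an exhaustive rule set, and the function names are made up for this example; a dedicated secret scanner will catch far more than this.

```python
import re
from pathlib import Path
from typing import Dict, List, Tuple

# Illustrative patterns only (assumed for this sketch, far from complete).
SECRET_PATTERNS = {
    # Commonly cited shape of an AWS access key ID.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # A secret-looking name assigned a quoted literal of 8 or more characters.
    "assigned_secret": re.compile(
        r"""(?i)(?:password|passwd|secret|api[_-]?key|token)\w*['"]?\s*[:=]\s*['"][^'"\s]{8,}['"]"""
    ),
}


def scan_text(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a pattern."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, name))
    return findings


def scan_tree(
    root: str,
    suffixes: Tuple[str, ...] = (".py", ".js", ".ts", ".json", ".yaml", ".yml"),
) -> Dict[str, List[Tuple[int, str]]]:
    """Scan files under `root` with the given suffixes, plus any file named `.env`."""
    results = {}
    for path in Path(root).rglob("*"):
        if path.is_file() and (path.suffix in suffixes or path.name == ".env"):
            hits = scan_text(path.read_text(encoding="utf-8", errors="ignore"))
            if hits:
                results[str(path)] = hits
    return results
```

Calling `scan_tree(".")` from the project root returns a mapping of file paths to suspicious lines. Values read from the environment, such as `os.environ["DB_PASSWORD"]`, are not flagged because no quoted literal is being assigned.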

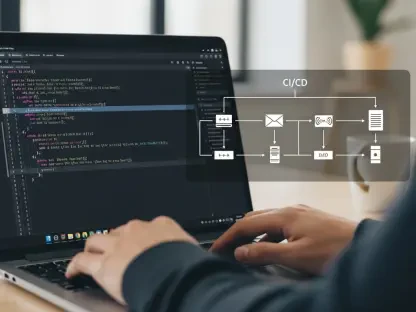

Vibe coding excels at initial builds but can introduce risks when scaled. At what point should an engineer get involved, and what advanced security measures—like CI/CD pipeline checks or software scanning—become non-negotiable for ensuring an application is truly production-ready?

The moment an application moves beyond a simple proof of concept and is intended to support a growing number of real users, it’s time to bring in an engineer. That’s the inflection point where you need more rigorous threat modeling and automated guardrails. At this stage, several security measures become non-negotiable. First, you need software scanning tools that analyze every package and dependency your app relies on and check them for known vulnerabilities. Second, you must integrate security into your CI/CD pipeline. This means automated checks, such as pre-commit hooks backed by pipeline gates, that block code containing hardcoded secrets from being committed or merged. These practices move security from a manual, one-time check to an automated, continuous process.
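As a rough sketch of the automated guardrail idea, the script below inspects only the lines being added in a staged change and reports a failure if any of them look like a hardcoded secret. The single pattern and the function names are assumptions made for illustration; the `git diff --cached --unified=0` call is the one real dependency.

```python
import re
import subprocess
import sys
from typing import List

# One illustrative rule (an assumption): a secret-looking name assigned a quoted literal.
SECRET_ASSIGNMENT = re.compile(
    r"""(?i)(?:password|passwd|secret|api[_-]?key|token)\w*\s*[:=]\s*['"][^'"\s]{8,}['"]"""
)


def added_lines(diff_text: str) -> List[str]:
    """Return the lines a unified diff adds, without the leading '+'.

    Lines starting with '+++' are treated as file headers and skipped, so an
    added line whose own content begins with '++' would be missed by this sketch.
    """
    return [
        line[1:]
        for line in diff_text.splitlines()
        if line.startswith("+") and not line.startswith("+++")
    ]


def blocked_lines(diff_text: str) -> List[str]:
    """Return added lines that match the secret pattern."""
    return [line for line in added_lines(diff_text) if SECRET_ASSIGNMENT.search(line)]


def main() -> int:
    """Check the staged diff; a non-zero return value means the commit should be rejected."""
    diff = subprocess.run(
        ["git", "diff", "--cached", "--unified=0"],
        capture_output=True, text=True, check=True,
    ).stdout
    hits = blocked_lines(diff)
    if hits:
        # Report a count only, so the secret itself is not echoed into logs.
        print(f"{len(hits)} staged line(s) look like hardcoded secrets", file=sys.stderr)
        return 1
    return 0
```

To use it as a Git hook, the script would be saved as an executable `.git/hooks/pre-commit` file ending with a line such as `raise SystemExit(main())`. Because local hooks can be bypassed, running the same check in the CI/CD pipeline is what actually stops a merge.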

Tracking the origin of AI-generated code is crucial for accountability. How can teams effectively use metadata to log which models and tools contributed to the application, and why is this information vital for long-term security maintenance and vulnerability management?

Effective tracking is all about embedding clear metadata with any AI-assisted contributions. This means that for every piece of code generated by an AI, you should log which specific model was used, what version it was, and which LLM tool or platform was involved in its creation. This information is absolutely vital for a few reasons. First, if a vulnerability is discovered in the code-generation patterns of a particular AI model, you need to be able to quickly find every piece of code in your system that was built with it. Without that metadata, you’re flying blind. Second, it’s about long-term maintenance and accountability. Knowing the origin helps you understand why certain code exists and makes it easier to patch, update, or refactor it securely down the line.
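One possible shape for that metadata is a small provenance record per file, stored as JSON Lines alongside the code. The field names, the storage format, and the lookup helper below are assumptions for illustration; the interview specifies only that the model, its version, and the tool should be logged.

```python
import json
from dataclasses import asdict, dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Provenance:
    """Which model and tool produced a given file (hypothetical schema)."""
    path: str
    model: str
    model_version: str
    tool: str


def to_jsonl(records: Iterable[Provenance]) -> str:
    """Serialize records as one JSON object per line."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in records)


def from_jsonl(text: str) -> List[Provenance]:
    """Parse records written by `to_jsonl`, ignoring blank lines."""
    return [Provenance(**json.loads(line)) for line in text.splitlines() if line.strip()]


def affected_paths(
    records: Iterable[Provenance],
    model: str,
    model_version: Optional[str] = None,
) -> List[str]:
    """List files generated by `model`, optionally narrowed to one version."""
    return sorted({
        r.path
        for r in records
        if r.model == model
        and (model_version is None or r.model_version == model_version)
    })
```

If a flaw is later reported in a particular model version, `affected_paths(records, model, version)` gives the list of files to review first, which is the “find every piece of code” step described above.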

What is your forecast for AI-assisted coding?

My forecast is that AI-assisted coding is here to stay and will only become more integrated into every facet of development. The freedom and speed it provides are simply too valuable to ignore. However, the future of this practice depends entirely on our ability to match that speed with an unwavering commitment to security. We’ll see a rise in AI-native security tools that can analyze and secure AI-generated code in real time. The goal won’t be to slow down innovation but to build a culture where security is a non-negotiable part of the vibe, ensuring that the amazing things we build quickly are also built to last safely.