Anand Naidu, our resident development expert, is proficient in both frontend and backend technologies and offers deep insight into a range of programming languages. With the rapid evolution of AI and large language models (LLMs), Anand shares an in-depth perspective on putting these advances to work in modern software development and on the fast-changing landscape of AI-driven coding tools.

Can you explain how the large language models (LLMs) in AI coding have evolved recently?

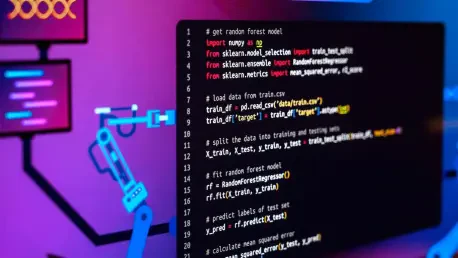

The evolution of large language models has been remarkable, with frequent upgrades transforming their capabilities. Earlier versions served basic code autocompletion, but newer models are vastly more refined. They’ve evolved from rudimentary coding aids to sophisticated systems capable of managing complex, context-based scenarios. Each update enhances their efficiency, pattern recognition, and ability to handle specific coding tasks or broader project contexts.

What are the key strengths of OpenAI’s GPT-4.1, and when is it best used in a coding project?

GPT-4.1 shines in scenarios requiring UI design and initial scaffolding. Its strength lies in converting visual elements into code, offering a streamlined approach to drafting design components. It proves invaluable for drafting API documentation or turning screenshots into working code. However, for threading a fix through a mature code base, it might not deliver the best results, because it can overlook links in complicated dependency chains.

Why might GPT-4.1 not be ideal for threading fixes through mature code bases?

While GPT-4.1 is adept at scaffolding new code, threading fixes through a mature code base is more challenging. The model occasionally struggles with long dependency paths and complex unit tests, both of which matter when revising established code. Without a solid grasp of these intricate links, GPT-4.1 may fail to produce consistent, reliable solutions in well-developed projects.

How does Anthropic’s Claude 3.7 Sonnet perform as a “workhorse” in coding tasks?

Claude 3.7 Sonnet is reliable for managing extensive coding tasks due to its impressive cost-to-latency balance and capacity to hold global project context. It’s particularly effective in iterative feature development and complex refactoring across multiple files. Despite its strengths, developers should be cautious of its tendency to disable checks for speed, warranting a vigilant approach with linters kept active to ensure code integrity.
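One way to stay vigilant about disabled checks is to scan generated code for lint-suppression comments before accepting it. The following is a minimal sketch, not a replacement for running the linter itself; the function name `find_suppressions` and the pattern list are illustrative assumptions, and the list is deliberately not exhaustive.

```python
import re

# Common suppression directives for Python and JavaScript/TypeScript tooling.
# This list is an illustrative assumption; extend it for your own toolchain.
SUPPRESSION_PATTERNS = [
    r"#\s*noqa",
    r"#\s*type:\s*ignore",
    r"#\s*pylint:\s*disable",
    r"eslint-disable",
    r"@ts-ignore",
]
_SUPPRESSION_RE = re.compile("|".join(SUPPRESSION_PATTERNS))


def find_suppressions(source: str) -> list[tuple[int, str]]:
    """Return (line_number, stripped_line) for every line that contains
    one of the suppression directives listed above."""
    hits = []
    for number, line in enumerate(source.splitlines(), start=1):
        if _SUPPRESSION_RE.search(line):
            hits.append((number, line.strip()))
    return hits
```

A reviewer could run this over a model's proposed change and ask for a justification of each hit rather than rejecting the change outright, since some suppressions are legitimate.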

What specific challenges might you face when using Claude 3.7 Sonnet for visual tasks or unit test mocks?

The model exhibits weaknesses in visual tasks, CSS fine-tuning, and unit test mocks. These areas often require nuanced adjustments and attention to detail, which Claude 3.7 Sonnet may not fully capture. Developers might encounter suboptimal solutions and should expect to review and refine the output by hand so that it meets the expected standards and specifications.

In what scenarios is Google’s Gemini 2.5 Pro-Exp particularly effective?

Gemini 2.5 excels in UI-intensive projects due to its vast token context, allowing for comprehensive code generation in dashboard development and design-system enhancements. It’s highly effective in polishing front-end design elements and ensuring accessibility standards. Its efficiency provides swift results, making it a go-to choice for fast-paced UI tasks where speed is critical.

What potential issues should developers be aware of when using Gemini 2.5?

The model’s confidence in outdated API calls is a noted pitfall; it sometimes asserts usage that no longer matches the current API. Developers must remain vigilant against hallucinated libraries and verify any library the model cites, to avoid integrating code that depends on packages or calls that do not exist. This highlights the need for human oversight to keep project-dependent APIs accurate and current.
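A first-pass check for hallucinated libraries can be automated. The sketch below, assuming the generated code is Python, parses the source and reports top-level imports that cannot be found in the current environment. The function name `unresolved_imports` is hypothetical. Note the limits: a package that is simply not installed is also reported, and this does nothing to detect outdated calls on a library that does exist.

```python
import ast
import importlib.util


def unresolved_imports(source: str) -> list[str]:
    """Return the sorted top-level module names imported by `source`
    that cannot be located in the current Python environment."""
    tree = ast.parse(source)
    names = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # level == 0 skips relative imports, which refer to project files.
            names.add(node.module.split(".")[0])

    missing = []
    for name in sorted(names):
        try:
            found = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            found = False
        if not found:
            missing.append(name)
    return missing
```

Anything this reports still needs a human decision: install the package, or treat the import as a likely invention and look up the real library.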

How does OpenAI’s o3 model stand out from other LLMs, and why might it not be suitable for everyday use?

The o3 model stands out for its reasoning prowess, ideal for high-level problem-solving and analysis. It superbly handles intricate test suites and complex reasoning tasks due to its advanced tools and processes. However, its premium cost and slower operation make it suitable only for specialized instances rather than everyday tasks, positioning it as a luxury model for specific demands.

Why is o4-mini considered a surprise hit for debugging, and what are its limitations?

o4-mini surprises many with its speed and efficiency in debugging, particularly with its ability to reformulate test harnesses and resolve intricate logic problems other models might struggle with. Its strength lies in its quick, precise patches, but its terse output limits broader explanations, making it more suited for isolated debugging rather than comprehensive code generation.

Can you describe a multi-model workflow integrating different LLMs for optimal results?

A strategic multi-model workflow leverages each model’s unique strengths, akin to a relay race. Begin with GPT-4.1 for initial UI concept generation, move to Claude 3.7 Sonnet to flesh out the logic, and use Gemini 2.5 to scaffold the project. Then fine-tune the code with o4-mini for precise debugging. This sequence plays to each model’s strengths, reduces token burn, and combines their capabilities for better results.
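The relay described above can be sketched as a simple pipeline in which each stage hands its output to the next. This is only an illustration under stated assumptions: the stage names, the `Handoff` record, and the `call_model` callback are hypothetical, and no real vendor API is called. In practice `call_model` would wrap whichever client each provider offers.

```python
from dataclasses import dataclass
from typing import Callable

# Stage order follows the interview; the stage labels are illustrative.
RELAY_STAGES = [
    ("ui_concept", "GPT-4.1"),
    ("logic", "Claude 3.7 Sonnet"),
    ("scaffolding", "Gemini 2.5"),
    ("debugging", "o4-mini"),
]


@dataclass
class Handoff:
    stage: str
    model: str
    artifact: str


def run_relay(task: str, call_model: Callable[[str, str, str], str]) -> list[Handoff]:
    """Pass `task` through each stage in order. `call_model(model, stage, artifact)`
    is a caller-supplied function that returns the next artifact."""
    artifact = task
    history = []
    for stage, model in RELAY_STAGES:
        artifact = call_model(model, stage, artifact)
        history.append(Handoff(stage, model, artifact))
    return history


def echo_model(model: str, stage: str, artifact: str) -> str:
    """Stand-in for a real model call; it only tags the artifact with the stage."""
    return f"{artifact}>{stage}"
```

Keeping the full handoff history, rather than only the final artifact, gives a human reviewer a checkpoint after every leg of the relay.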

What advantages does a “relay race” strategy offer when using multiple LLM models?

This approach keeps each model within its strongest realm, preventing overuse and optimizing particular strengths at each development stage. It minimizes model limitations, like token burn and rate caps, while effectively harnessing free-tier opportunities. Ultimately, it delivers a well-rounded, efficient coding workflow that combines multiple LLM strengths innovatively.

Why is human review essential in LLM coding, according to the article?

Given their reliance on pattern matching over accountability, LLMs can sometimes produce code that overlooks root causes, adds unnecessary dependencies, or disables essential checks. Therefore, human review remains crucial to guarantee code quality and integrity. The human element brings nuanced problem-solving and decision-making, safeguarding against the models’ occasional oversights.

What are common pitfalls to watch for when relying on LLMs for coding tasks?

Common pitfalls include dependency mismanagement, inconsistent handling of failing paths, and the temporary disabling of critical checks to boost performance. Monitoring and resolving these issues manually are vital to prevent incorporating flawed code or weakening the project’s structural integrity.

How does the writer suggest balancing model use with traditional coding methods for best results?

The writer recommends integrating LLMs strategically without forsaking traditional coding practices. It’s about finding harmony—exploiting LLMs’ rapid generation abilities while relying on traditional methods for deep reasoning and accountability. Balancing the innovative capabilities of LLMs with seasoned coding techniques strikes a successful equilibrium.

What improvements in AI coding might compel a developer who previously dismissed it to reconsider?

Recent advancements—both in model sophistication and reliability—might shift perspectives on AI coding. Enhanced accuracy, reduced latency, and refined specialization offer a compelling case for exploring AI-driven coding again. These improvements hint at greater productivity, streamlined workflows, and the effective handling of complex tasks not previously possible with AI.

How might LLM efficiency be affected by rate caps and token burn?

Rate caps and token limits can throttle performance and inflate costs significantly. With meticulous planning—for instance, using a relay strategy—developers can minimize these effects, maintaining an efficient balance between model comprehensiveness and resource allocation. This approach helps manage overall productivity without incurring excessive costs.
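To make the planning concrete, here is a minimal sketch of a per-model token budget that falls back to the next preferred model once a cap would be exceeded. The class name `TokenBudget`, the limits, and the token estimates are all hypothetical; real rate caps vary by provider and plan and are not modeled here.

```python
from typing import Optional


class TokenBudget:
    """Tracks token use per model against a fixed, caller-defined limit."""

    def __init__(self, limits: dict[str, int]):
        self.limits = dict(limits)
        self.used = {model: 0 for model in limits}

    def remaining(self, model: str) -> int:
        return self.limits[model] - self.used[model]

    def pick(self, preferred: list[str], estimated_tokens: int) -> Optional[str]:
        """Return the first preferred model with enough budget left, or None."""
        for model in preferred:
            if model in self.limits and self.remaining(model) >= estimated_tokens:
                return model
        return None

    def record(self, model: str, tokens: int) -> None:
        """Record tokens actually consumed by a completed request."""
        self.used[model] += tokens
```

For example, with a limit of 1,000 tokens on a premium model and 5,000 on a cheaper one, a request estimated at 800 tokens goes to the premium model first; a second request of the same size no longer fits and is routed to the cheaper one.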

What role does automated contract testing and linting play in ensuring code quality when using LLMs?

Automated testing and linting act as essential safeguards, validating generated code’s correctness and adherence to project standards. They help identify discrepancies and rectify model oversights, ensuring that code quality remains high despite potential inconsistencies introduced by LLMs. In essence, these practices reinforce accountability and maintain system robustness.
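As a small illustration of the contract-testing idea, the sketch below checks that a payload produced by generated code has the fields and types the rest of the system expects. The `CONTRACT` fields and the function name `contract_violations` are invented for the example; a real project would more likely use a schema or contract-testing tool.

```python
# Hypothetical contract: field name -> required type.
CONTRACT = {"id": int, "name": str, "active": bool}


def contract_violations(payload: dict, contract: dict[str, type]) -> list[str]:
    """Return a human-readable problem for each missing or mistyped field.
    Types are compared exactly, so a bool is not accepted where int is required."""
    problems = []
    for field, expected in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif type(payload[field]) is not expected:
            actual = type(payload[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    return problems
```

Run in continuous integration, a check like this fails the build when a model quietly changes a response shape, instead of leaving the mismatch to be found in production.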

In what ways does the writer perceive LLMs as similar to interns on a coding team?

LLMs are likened to interns for their remarkable memory and pattern recognition but equally recognized for their lack of experience-driven judgment. They quickly identify patterns and execute repetitive tasks proficiently, yet they’re seen as needing supervision and oversight to guide their “decisions” and ensure the best outcomes. So, like novice interns, while valuable, they require a mentor’s guiding hand for optimal contribution.

Do you have any advice for our readers?

Embrace the evolution of AI while maintaining a critical viewpoint. Integrate LLMs into your projects, but don’t rely on them entirely. Diversify your workflow by combining AI innovation with traditional approaches to enhance productivity and maintain a high standard of code integrity. Continuous learning and adaptation will ultimately yield the best results in an ever-evolving technological landscape.