In the rapidly evolving field of artificial intelligence (AI), a notable trend is the growing adoption of open-source AI models, which form a shared pool of resources much as open-source software (OSS) did in its early days. As with any collaborative ecosystem, however, this practice brings its own complexities and security challenges, and developers and organizations must address them to implement these models safely and effectively. Open-source AI models let researchers and developers build on each other’s work, accelerating innovation and reducing redundant effort. Platforms like Hugging Face have become influential communities where this kind of collaborative development thrives. Yet the same interconnectedness that fuels progress also amplifies risk. Whether through the infiltration of malicious code or the propagation of vulnerabilities through dependent components, the shared nature of these models exposes them to security problems at multiple layers. Robust security measures and comprehensive oversight are therefore becoming essential to safeguard this emerging domain.

The Parallel Evolution of AI and Open-Source Software

The development trajectories of AI models and OSS share striking similarities that underscore both their potential and their inherent risks. Both ecosystems thrive on community collaboration, innovation, and shared knowledge, which drive their rapid growth. The early days of OSS saw a surge of community-driven projects that laid the foundation for today’s software landscape. Similarly, AI development today, particularly on platforms like Hugging Face, benefits immensely from researchers and developers readily sharing their models and insights to advance the field collectively.

Alongside the benefits of shared resources, however, comes the peril of cascading vulnerabilities. In a shared ecosystem, a security flaw in one component can ripple through every project that builds on it, compromising their integrity. This risk is especially pronounced for AI models because of the complex interdependencies in their design. The same collaboration that fuels rapid innovation therefore demands stringent security protocols to contain these cascading risks, so that reliance on shared knowledge does not become a vulnerability for malicious actors to exploit.

Rising Security Concerns in AI Development

Security remains one of the foremost challenges in AI development, particularly as open-source models grow in popularity. The opaque, “black-box” nature of many AI models makes them prime targets for malicious activity, because their inner workings are often neither fully transparent nor well understood. Risks such as malicious code injection and typosquatting (publishing models under names similar to popular ones to deceive users) are growing concerns. Compromised user credentials can also let attackers access and manipulate models without authorization, further complicating the security landscape.
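A simple defensive check against typosquatting is to compare each requested model ID with an allowlist of IDs that have already been vetted and to flag near-misses. The sketch below assumes such an allowlist exists and uses plain Levenshtein edit distance with a hypothetical threshold of two edits; `suspicious_lookalike` and the threshold are illustrative choices, not part of any particular tool.

```python
from typing import Iterable, Optional


def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def suspicious_lookalike(
    requested: str, trusted: Iterable[str], max_distance: int = 2
) -> Optional[str]:
    """Return the vetted ID that `requested` closely imitates, or None.

    An exact match is treated as safe. A different ID within `max_distance`
    edits of a vetted ID (hypothetical threshold) is flagged as a possible
    typosquat, and the closest vetted ID is returned.
    """
    trusted = list(trusted)  # allow any iterable to be scanned twice
    if requested in trusted:
        return None
    best, best_dist = None, max_distance + 1
    for name in trusted:
        dist = levenshtein(requested, name)
        if dist <= max_distance and dist < best_dist:
            best, best_dist = name, dist
    return best
```

Edit distance catches swapped or dropped characters, but not lookalike organization names that differ by more than a few edits, so it complements rather than replaces checking who actually published a model.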

Moreover, pre-trained AI models often incorporate multiple dependencies, each adding layers of vulnerability that are not immediately apparent. These hidden weak points make it difficult for developers to identify and mitigate risks. The potential misuse of AI models to propagate harmful or biased outputs compounds these concerns. Comprehensive security measures that address these multilayered challenges are therefore essential for the continued safe development and deployment of AI technologies.

Endor Labs’ Approach to Enhancing AI Model Security

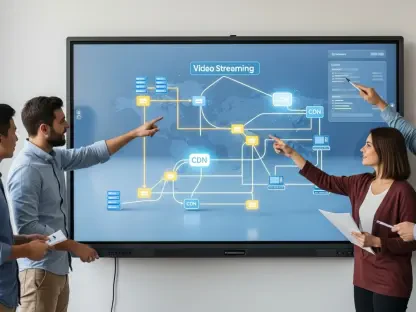

To tackle these security challenges, Endor Labs, a software supply chain security company, has introduced a platform designed to score AI models on Hugging Face based on several critical factors, including security, activity, quality, and popularity. This scoring system aims to provide developers with a reliable metric to evaluate the trustworthiness of AI models without requiring deep expertise in each specific model. By offering an objective assessment of these crucial parameters, the platform helps mitigate the risks associated with open-source AI models.

The scoring system provides a comprehensive view of a model’s reliability. Security assessments are pivotal for identifying potential vulnerabilities within a model. Activity scores reflect the level of recent contributions, indicating whether the model is actively maintained or has been abandoned. Quality evaluations focus on the model’s overall performance and robustness, while popularity metrics gauge the community’s trust in and use of the model. By combining these factors, Endor Labs’ platform offers a multidimensional assessment that helps developers make informed decisions about which models to integrate into their projects.
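How Endor Labs actually combines these dimensions is not described here. One common way to fold several signals into a single number is a weighted average; the sketch below assumes each dimension has already been normalized to a 0-10 scale and uses made-up weights, so `ModelSignals`, `WEIGHTS`, and `overall_score` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass
class ModelSignals:
    """Per-dimension sub-scores, each assumed to be normalized to 0-10."""
    security: float
    activity: float
    quality: float
    popularity: float


# Hypothetical weights (security weighted most heavily); these are
# illustrative values, not Endor Labs' scoring formula.
WEIGHTS = {"security": 0.4, "activity": 0.2, "quality": 0.25, "popularity": 0.15}


def overall_score(signals: ModelSignals, weights: Mapping[str, float] = WEIGHTS) -> float:
    """Weighted average of the sub-scores, rounded to two decimals."""
    for name, value in vars(signals).items():
        if not 0.0 <= value <= 10.0:
            raise ValueError(f"{name} sub-score {value} is outside 0-10")
    total_weight = sum(weights.values())
    weighted = sum(w * getattr(signals, name) for name, w in weights.items())
    return round(weighted / total_weight, 2)
```

A plain weighted average lets a very popular model mask a weak security score; a stricter policy might reject any model whose security sub-score falls below a floor before averaging the rest.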

Navigating the Complexity of AI Dependencies

One of the significant challenges in AI development is navigating the complexity of AI model dependencies, often referred to as “complex dependency graphs.” AI models are typically built as extensions of other models, creating intricate networks of interdependent components. This interdependency can complicate efforts to manage and secure AI models effectively, as each model in the graph can introduce new vulnerabilities, making it challenging to maintain a robust security posture across the entire system.

Tools and platforms that offer visibility into these dependencies are essential for developers. They enable a better understanding of how different models interact and help identify potential weak points within this interdependent network. Endor Labs’ scoring system is a step in this direction, providing insights into the dependencies within AI models and equipping developers with the information needed to make more informed and secure decisions. By addressing the complexities of AI model dependencies, these tools contribute to enhancing the overall security and reliability of AI deployments.
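Visibility into a model’s lineage can start with walking its graph of base models. Hugging Face model cards can record a `base_model` field in their metadata; the sketch below assumes that lineage has already been collected into a plain dictionary mapping each model to its direct parents, and every model ID in it is hypothetical. It lists each upstream model once, then reports which of them appear in a set of models flagged by some earlier scan.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage: each key lists the models it was directly derived from.
LINEAGE: Dict[str, List[str]] = {
    "acme/support-bot": ["acme/chat-base"],
    "acme/chat-base": ["org/instruct-7b", "community/lora-adapter"],
    "org/instruct-7b": ["org/foundation-7b"],
    "community/lora-adapter": ["org/foundation-7b"],
}


def transitive_ancestors(model: str, parents: Dict[str, List[str]]) -> List[str]:
    """Breadth-first walk up the lineage graph, returning each upstream model once."""
    seen: Set[str] = set()
    order: List[str] = []
    queue = deque(parents.get(model, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # reached via another path (or a cycle); report it only once
        seen.add(current)
        order.append(current)
        queue.extend(parents.get(current, []))
    return order


def inherited_risks(model: str, parents: Dict[str, List[str]], flagged: Set[str]) -> List[str]:
    """Upstream models that appear in `flagged`, e.g. ones that failed a security scan."""
    return [m for m in transitive_ancestors(model, parents) if m in flagged]
```

With the example lineage, `inherited_risks("acme/support-bot", LINEAGE, {"community/lora-adapter"})` returns `["community/lora-adapter"]`, surfacing a risk two levels above the model a developer actually chose.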

Governance and Regulatory Needs in AI Deployment

As AI technologies continue to permeate various sectors, the necessity for robust governance and oversight mechanisms becomes increasingly apparent. Effective governance frameworks are crucial to ensure that AI deployment is not only safe and secure but also ethical and aligned with societal values. This involves setting standards for model transparency, accountability, and security that can guide organizations in their AI development and deployment processes.

Endor Labs plans to expand its scoring system to include models from other platforms and potentially commercial providers like OpenAI. This expansion signifies a broader industry trend towards enhancing the governance of AI models. By establishing comprehensive guidelines and assessments, the industry can foster greater trust and reliability in AI technologies, ensuring that their widespread adoption benefits society as a whole. Effective governance mechanisms are essential to address the complex ethical and security challenges posed by advanced AI systems, paving the way for responsible innovation in this transformative field.

Addressing Vulnerabilities and Risks in AI Models

AI models are particularly susceptible to vulnerabilities because they rely on pre-built components and third-party datasets, which can introduce hidden risks. The serialization formats commonly used to store models, such as those of PyTorch, TensorFlow, and Keras, can be abused to execute arbitrary code when a model is loaded or run. These risks call for continuous vigilance and proactive measures to identify and mitigate threats before AI models are deployed in real-world applications.
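PyTorch’s default checkpoint format, for example, is built on Python’s `pickle`, and unpickling can import and call arbitrary functions. A scanner can inspect the pickle opcode stream with the standard library’s `pickletools.genops` without ever executing it. The sketch below works on raw pickle bytes (a file written by recent versions of `torch.save` is a zip archive, so its embedded pickle would need to be extracted first); `DANGEROUS_MODULES`, `imported_globals`, and `is_suspicious` are hypothetical names, and the denylist is illustrative rather than exhaustive.

```python
import pickletools
from typing import List, Tuple

# Illustrative, non-exhaustive denylist of modules whose callables can run
# commands or arbitrary code. "posix"/"nt" are where os.system actually lives.
DANGEROUS_MODULES = {
    "os", "posix", "nt", "subprocess", "builtins", "__builtin__",
    "sys", "socket", "runpy", "shutil",
}

# Opcodes that push a text string onto the unpickler's stack.
_STRING_OPS = {"UNICODE", "SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8"}


def imported_globals(data: bytes) -> List[Tuple[str, str]]:
    """List the (module, name) pairs a pickle would import, without unpickling it."""
    found: List[Tuple[str, str]] = []
    recent_strings: List[str] = []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in ("GLOBAL", "INST"):
            # Protocols 0-3 encode the import inline as "module name".
            module, name = arg.split(" ", 1)
            found.append((module, name))
        elif opcode.name == "STACK_GLOBAL":
            # Protocol 4+ pops module and name from the stack; approximate them
            # with the two most recently pushed strings (simplified heuristic).
            if len(recent_strings) >= 2:
                found.append((recent_strings[-2], recent_strings[-1]))
        elif opcode.name in _STRING_OPS:
            recent_strings.append(arg)
    return found


def is_suspicious(data: bytes) -> bool:
    """Flag a pickle that imports anything from a denylisted top-level module."""
    return any(
        module.split(".")[0] in DANGEROUS_MODULES
        for module, _name in imported_globals(data)
    )
```

The string tracking for `STACK_GLOBAL` is deliberately simple and can be evaded, for example by memoized strings fetched with `BINGET`, and legitimate uses of listed modules such as `builtins` can cause false positives. Where possible, tensor-only formats such as safetensors, or loading with PyTorch’s `torch.load(..., weights_only=True)`, avoid the problem rather than detecting it.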

Another challenge is that, unlike open-source software, AI models tend to be static and infrequently updated, so they can become outdated and potentially insecure over time. Thorough scrutiny and verification before a model is first deployed are therefore crucial to its ongoing reliability and safety. By addressing vulnerabilities and risks proactively, developers can make AI models more secure and robust, contributing to the overall trustworthiness of AI technologies.
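One concrete form of verification at deployment time is to record a cryptographic digest of the exact model file that was reviewed and to refuse to load anything else. This is a minimal sketch using SHA-256 from Python’s standard library; `sha256_of` and `verify_pinned` are hypothetical helper names, and where the pinned digest is stored is left open.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in fixed-size chunks so large weight files are never read into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_pinned(path: Path, expected_sha256: str) -> None:
    """Raise if the file on disk is not byte-for-byte the artifact that was reviewed."""
    actual = sha256_of(path)
    if actual != expected_sha256.strip().lower():
        raise RuntimeError(
            f"{path.name}: expected sha256 {expected_sha256}, got {actual}"
        )
```

Hugging Face’s download APIs also accept a `revision` argument, so pinning a specific commit hash rather than a branch name such as `main` complements a file-level checksum.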

Navigating Licensing Complexities in AI Development
