The rapid proliferation of large language models has created an industry-wide push for reliability and scale, yet many applications still behave like unpredictable black boxes, undermining user trust and limiting their enterprise potential. While prompt engineering offered a first step toward taming these powerful systems, a growing consensus among AI developers and strategists suggests that the true key to unlocking next-generation capabilities lies in a more fundamental, architectural discipline. This emerging field, known as context engineering, is being positioned not as an enhancement but as the essential blueprint for building intelligent, dependable, and autonomous AI. To understand this paradigm shift, it is crucial to gather insights from across the industry, examining the core principles, common failure points, and strategic techniques that define this new architectural mandate.

Defining the New Frontier: What Experts Mean by Context Engineering

A Consensus on Moving Beyond Prompting

A prevailing view among AI architects is that the era of treating prompt creation as a standalone craft is rapidly coming to an end. This perspective frames prompt engineering as a valuable but ultimately limited precursor to a more systematic discipline. According to this school of thought, context engineering elevates the practice from the micro-level task of wording instructions to the macro-level science of designing the entire information ecosystem an AI model inhabits. The focus shifts from the prompt itself to the architectural orchestration of grounding data, operational tools, behavioral policies, and the dynamic mechanisms governing what the model sees at any given moment.

This architectural approach is defined by its objective: to systematically distill a universe of potential information down to a curated, high-signal set of tokens that dramatically increases the probability of a desired outcome. Rather than relying on clever phrasing, this discipline involves building robust pipelines that select, filter, and structure information before it ever reaches the model. Proponents argue this is the only viable path toward creating AI systems that are not just occasionally impressive but consistently reliable and auditable, transforming them from creative tools into mission-critical enterprise components.

Contrasting Views on Scope and Implementation

However, not all practitioners define context engineering in the same way. A more tactical viewpoint, often found among teams focused on immediate application development, sees it as an advanced form of Retrieval-Augmented Generation (RAG). In this interpretation, the core of the discipline is perfecting the retrieval mechanisms—optimizing document chunking, improving vector search, and refining reranking algorithms to feed the model the most relevant facts. While this approach is critical, some argue it overlooks the broader strategic elements.

In contrast, a more holistic perspective championed by systems thinkers posits that context engineering encompasses the entire lifecycle of information management. This view extends beyond data retrieval to include the design of long-term memory systems, the strategic decomposition of complex workflows into smaller, contextually-managed tasks, and the enforcement of structured outputs through schemas. This broader definition treats the context window as a managed state, akin to memory in a traditional computer program, which must be carefully orchestrated to enable complex reasoning and autonomous action. This debate highlights a field in active development, with its scope ranging from tactical optimization to a complete reimagining of the AI software development stack.
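One element of the holistic view, enforcing structured outputs through schemas, can be illustrated with a small sketch. It assumes the model has been asked to reply in JSON. The schema, the field names, and the `parse_structured` helper are hypothetical, chosen only for illustration. A production system would more likely use a full schema validator.

```python
import json

# Hypothetical flat schema: field name -> required Python type.
TICKET_SCHEMA = {"category": str, "priority": int, "summary": str}


def parse_structured(raw: str, schema: dict) -> dict:
    """Parse model output as JSON and check it against a flat schema.

    Raises ValueError on malformed JSON, missing fields, or wrong types.
    Fields not named in the schema are dropped.
    """
    data = json.loads(raw)  # json.JSONDecodeError is a ValueError subclass
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in schema if key not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    wrong = [key for key, kind in schema.items() if not isinstance(data[key], kind)]
    if wrong:
        raise ValueError(f"wrong types: {wrong}")
    return {key: data[key] for key in schema}
```

Rejecting a malformed reply at this boundary keeps downstream code from acting on output it cannot interpret.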

Unpacking the Components and Perils of an Unmanaged Context

The Multi-Layered Anatomy of AI Understanding

Industry experts widely agree that an AI’s “context” is not a monolithic block of text but a complex, multi-layered construct. One common framework dissects this environment into distinct components, each serving a unique function. At the base lies the system prompt, which acts as the model’s foundational charter, defining its core purpose, persona, and operational guardrails. Layered on top are ephemeral elements like the immediate user query and the conversational history, which provides short-term memory and continuity.

Further enriching this environment are dynamic components that ground the model in reality. Retrieved information, pulled from external knowledge bases, prevents the model from relying solely on its static training data, thereby mitigating hallucinations. Simultaneously, a curated set of available tools—described as functions or API endpoints—grants the model agency, allowing it to interact with external systems. Finally, structured output definitions impose a required format on the model’s response, ensuring its output is predictable and machine-readable. Understanding this layered anatomy is considered a prerequisite for any serious engineering effort.
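The layers described above can be sketched as a simple data structure that is rendered into a single prompt. This is a minimal illustration rather than a standard format. The `ContextLayers` class, the section labels, and the ordering of sections are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class ContextLayers:
    """Hypothetical container for the context layers named in the text."""
    system_prompt: str
    user_query: str
    history: list = field(default_factory=list)    # prior conversation turns
    retrieved: list = field(default_factory=list)  # grounding passages
    tools: list = field(default_factory=list)      # tool descriptions
    output_schema: str = ""                        # required response format


def render_context(layers: ContextLayers) -> str:
    """Join the non-empty layers into one labeled prompt string."""
    sections = [
        ("SYSTEM", layers.system_prompt),
        ("TOOLS", "\n".join(layers.tools)),
        ("RETRIEVED", "\n".join(layers.retrieved)),
        ("HISTORY", "\n".join(layers.history)),
        ("OUTPUT FORMAT", layers.output_schema),
        ("USER", layers.user_query),
    ]
    # Empty layers are skipped so they add no noise to the prompt.
    return "\n\n".join(f"[{name}]\n{body}" for name, body in sections if body)
```

Keeping the layers separate until the last moment lets each one be filtered, budgeted, or tested on its own.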

Identifying the Critical Failure Modes

A significant body of analysis is dedicated to identifying how unmanaged context leads to system failure. One frequently cited failure mode is Context Poisoning, where a single piece of erroneous information—whether a factual error or a model-generated hallucination—is introduced and then treated as ground truth in subsequent reasoning steps. This can cause the system’s logic to derail spectacularly, as each new step builds upon a flawed foundation. For example, a customer service bot that incorrectly records a user’s initial problem might spend the rest of the conversation trying to solve a nonexistent issue, leading to immense frustration.

Other common pitfalls include Context Distraction and Context Confusion. The former occurs when an excessively long or verbose context window causes the model to lose focus, fixating on irrelevant details from past turns instead of addressing the current query. The latter arises when noisy, irrelevant data is injected, such as superfluous search results or tool definitions that are not pertinent to the task. Another critical issue, Context Clash, happens when newly introduced information directly contradicts earlier data, resulting in inconsistent or broken model behavior. Industry leaders caution that applications failing to mitigate these risks will face a significant competitive disadvantage due to their inherent unreliability.
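One possible guard against Context Poisoning and Context Clash is to record key facts in a ledger that refuses silent overwrites. The sketch below assumes facts can be reduced to key and value pairs. The `Fact` and `FactLedger` names are hypothetical, and keeping the first value on conflict is one policy among several.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    key: str
    value: str
    source: str  # for example "user", "tool", or "model"


class FactLedger:
    """Hypothetical keyed store that logs contradictions instead of overwriting."""

    def __init__(self) -> None:
        self._facts = {}
        self.conflicts = []  # list of (existing_fact, rejected_fact) pairs

    def add(self, fact: Fact) -> bool:
        """Store a fact; return False and log a conflict on contradiction."""
        existing = self._facts.get(fact.key)
        if existing is not None and existing.value != fact.value:
            self.conflicts.append((existing, fact))
            return False
        self._facts[fact.key] = fact
        return True

    def as_context(self) -> list:
        """Render accepted facts as lines suitable for the context window."""
        return [f"{f.key}: {f.value} (source: {f.source})" for f in self._facts.values()]
```

Logged conflicts can then be resolved explicitly, for instance by asking the user, rather than leaving the model to reason over contradictory statements.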

From Theory to Practice: Strategic Techniques in Modern Systems

The Art of Orchestrating Information Flow

To combat these failures, practitioners are developing a robust toolkit of strategic techniques. A foundational practice is selective retrieval, which ensures that instead of being fed an entire knowledge base, the model only sees the most relevant portions. This involves sophisticated methods for chunking documents, using vector embeddings to find semantically similar passages, and then injecting only the top-ranked excerpts into the prompt. This not only improves accuracy but also manages the limited space within the context window.
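The chunk, embed, and rank steps can be sketched with the standard library alone. Note the substitution: word-count vectors stand in here for learned vector embeddings, so this toy version matches on shared words rather than on meaning. The function names and the default chunk size are assumptions.

```python
import math
import re
from collections import Counter


def chunk(text: str, max_words: int = 40) -> list:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, chunks: list, k: int = 2) -> list:
    """Return the k chunks most similar to the query, best first."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]
```

Only the output of `top_k` would be injected into the prompt, which is what keeps the context window small and focused.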

Another key technique is context compression, where long conversational histories or verbose documents are programmatically summarized to preserve key facts while discarding conversational filler. This prevents the model from getting lost in irrelevant details. A related strategy is workflow decomposition, where a complex problem is broken down into a multi-step process. Each step involves a distinct call to the model with a context window tailored specifically for that sub-task, ensuring only necessary information is present at each stage. This methodical approach is seen as essential for building controllable and predictable systems.
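Context compression can be sketched as keeping the newest turns verbatim and folding older ones into a single summary line. The summarizer below is a deliberately naive placeholder that joins and truncates. In practice that step would probably be a call to a model. The function names and defaults are assumptions.

```python
from typing import Callable


def naive_summary(turns: list, max_chars: int = 80) -> str:
    """Placeholder summarizer: join the turns and truncate to max_chars."""
    joined = " | ".join(turns)
    return joined if len(joined) <= max_chars else joined[: max_chars - 3] + "..."


def compress_history(
    turns: list,
    keep_recent: int = 2,
    summarize: Callable[[list], str] = naive_summary,
) -> list:
    """Keep the newest turns verbatim and fold older ones into one line."""
    if len(turns) <= keep_recent:
        return list(turns)
    split = len(turns) - keep_recent
    older, recent = turns[:split], turns[split:]
    return [f"Summary of earlier turns: {summarize(older)}"] + recent
```

Passing the summarizer in as a parameter means the truncation placeholder can be swapped for a model-backed one without touching the rest of the pipeline.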

Transforming Generalist Models into Specialized Experts

A central argument from thought leaders is that raw model capability cannot substitute for a well-architected information flow. The process of transforming a generic foundation model into a specialized expert is almost entirely an exercise in context engineering. This begins with the careful curation of knowledge bases and toolsets. By restricting a model’s access to a high-quality, domain-specific set of documents and providing it with a limited but powerful set of relevant tools, developers can shape its behavior and expertise far more effectively than through prompting alone.

For instance, an AI assistant for a software developer is made effective not just by being told it is a coding expert, but by being grounded in the project’s actual codebase, having access to the latest API documentation, and being equipped with tools to run tests or commit code. This curated environment constrains the model’s operational domain, reducing the likelihood of irrelevant or incorrect responses. The consensus is that the true specialization of AI systems in 2026 is happening not at the model training level, but at the architectural level through sophisticated context management.
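Restricting a model to a curated toolset can be sketched as an allowlist per assistant profile. Everything named here is hypothetical: the tool functions are stubs, and the `coding_assistant` profile exists only to mirror the developer example above.

```python
# Stub tools; real ones would invoke a test runner, a docs index, and so on.
def run_tests(path: str) -> str:
    return f"ran tests in {path}"


def search_docs(query: str) -> str:
    return f"docs results for {query!r}"


def send_email(to: str) -> str:
    return f"emailed {to}"


ALL_TOOLS = {"run_tests": run_tests, "search_docs": search_docs, "send_email": send_email}

# Each profile sees only the tools relevant to its domain.
PROFILES = {"coding_assistant": ["run_tests", "search_docs"]}


def tools_for(profile: str) -> dict:
    """Return the name -> function map exposed to the given profile."""
    return {name: ALL_TOOLS[name] for name in PROFILES[profile]}


def call_tool(profile: str, name: str, arg: str) -> str:
    """Run a tool only if the profile's allowlist includes it."""
    tools = tools_for(profile)
    if name not in tools:
        raise PermissionError(f"{name} is not available to {profile}")
    return tools[name](arg)
```

Tools outside the allowlist are never described to the model and cannot be invoked, which narrows the model's operational domain in the way the text describes.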

Context as the Lynchpin for Autonomous AI

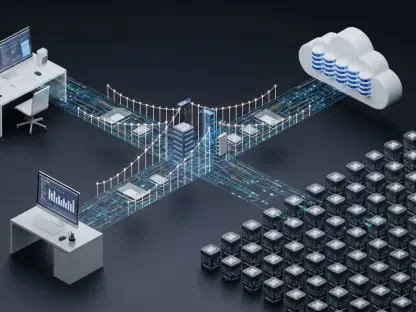

The conversation around context engineering becomes most urgent when discussing the future of AI: autonomous agents. Experts across the board assert that the leap from simple chatbots to goal-oriented agents that can plan, reason, and execute multi-step tasks depends entirely on advancements in context management. A simple question-answering bot has static needs, requiring only the user’s query and perhaps a retrieved document. An agent, by contrast, must manage a dynamic, stateful environment.

This agentic context must include the overall goal, the multi-step plan it has formulated, the results of previous actions, and the set of tools available for the next step. It is a constantly evolving workspace that the agent uses to reason about its progress and decide its next move. The engineering challenge lies in maintaining this complex state without succumbing to the context failures described earlier. Therefore, progress in context engineering is seen as a direct driver of progress in agentic AI, fueling the evolution from task-specific assistants to truly autonomous systems that can manage long-horizon objectives.
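The agentic workspace described above can be sketched as a small state object holding the goal, the plan, the results so far, and the available tools. The `AgentState` class and its rendering format are assumptions, and the sketch further assumes that each recorded result completes exactly one plan step.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentState:
    """Hypothetical workspace an agent re-renders before every model call."""
    goal: str
    plan: list
    tools: list
    results: list = field(default_factory=list)

    def next_step(self) -> Optional[str]:
        """Return the first plan step without a recorded result, if any."""
        done = len(self.results)
        return self.plan[done] if done < len(self.plan) else None

    def record(self, result: str) -> None:
        """Store the outcome of the step that was just executed."""
        self.results.append(result)

    def render(self, max_results: int = 3) -> str:
        """Build the context for the next call, capping how many results appear."""
        recent = self.results[-max_results:] if max_results > 0 else []
        lines = [f"Goal: {self.goal}"]
        lines += [f"Plan {i + 1}: {step}" for i, step in enumerate(self.plan)]
        lines += [f"Result: {r}" for r in recent]
        lines.append(f"Tools: {', '.join(self.tools)}")
        lines.append(f"Next: {self.next_step() or 'done'}")
        return "\n".join(lines)
```

Capping the rendered results is one simple way to keep a long-running agent from drifting into the Context Distraction failure described earlier.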

Building the Blueprint: A New Architectural Mandate

Actionable Takeaways for AI Teams

As the principles of context engineering solidify, a clear set of actionable takeaways has emerged for developers, product managers, and AI strategists. First, teams are advised to shift their mindset from prompt-centric design to an architectural one, viewing the prompt as just one component in a larger information delivery system. Best practices now include the systematic design of knowledge bases and the rigorous testing of retrieval systems to ensure high-signal, low-noise grounding data.

Furthermore, implementing robust context management systems has become a priority. This involves developing strategies for summarizing conversation history, prioritizing information within the context window, and decomposing complex user requests into smaller, manageable workflows. For product managers, this means defining success not just by the quality of a single response but by the reliability of the system over long and complex interactions. For AI strategists, the imperative is to invest in the underlying data and systems architecture, recognizing it as the primary driver of competitive differentiation.

The Future of AI Is the Architecture Around the Model

The discussions and developments of the past few years have solidified the view that context engineering is not an optional add-on but a fundamental paradigm shift in AI development. The long-term implications for building trustworthy and scalable systems are profound. By treating the informational environment with the same rigor as the model itself, organizations can move beyond proofs-of-concept and deploy AI applications that can be trusted with critical business processes.

Ultimately, the consensus is clear: the next great frontier of AI innovation will not be found solely in developing larger or more powerful models. Instead, it will be realized in the sophisticated architecture built around those models. The ability to manage the flow of information, supplying the right context at the right moment, is the defining characteristic of truly intelligent systems. This architectural mandate redefines AI development as a discipline of systematic engineering rather than unpredictable artistry.