AI coding assistants are now a standard part of the modern developer’s toolkit. Large language models accelerate development cycles by handling code completion, debugging, refactoring, and documentation.

But the market is now crowded, which makes choosing the right AI model difficult. The “best” tool is rarely universal. It depends on your team’s specific needs and on how you balance accuracy, cost, security, and workflow integration.

This guide moves beyond theoretical benchmarks to offer a practical framework for evaluation. It compares leading AI models, examines the differences between open-source and proprietary options, and outlines the non-negotiable requirements for enterprise adoption.

The Core Problem: Beyond Code Generation

AI coding models excel at generating code, but generation alone doesn’t solve the real challenge. The problem lies in context.

Most developers interact with these models through IDE plugins, where tools like JetBrains AI Assistant bring AI directly into the development workflow. But here’s the tension: an AI assistant’s value isn’t measured by how well it writes a function in isolation. It’s measured by whether it understands your project: its structure, dependencies, existing patterns, and constraints.

Early adoption focused on individual productivity gains: faster autocomplete, quicker boilerplate. But as professional teams scaled their use of AI tools, they encountered friction. Code that works in a vacuum often breaks in production. Security vulnerabilities slip through. Context gets lost between conversations. Recent analysis shows that nearly 85% of developers now use AI tools, yet adoption criteria have sharpened considerably. Teams aren’t asking “Can it write code?” anymore. They’re asking: “Can it understand our codebase? Can we trust it? Does it fit our workflow?”

Comparing the Leading AI Models

Developers today do not rely on a single LLM. Instead, they select from a handful of leading models based on the specific task at hand. The choice often comes down to a trade-off between raw performance, context handling, cost, and data privacy.

OpenAI’s GPT Models: Models like GPT-4o are widely recognized for their powerful reasoning and code generation capabilities. They excel at complex tasks like refactoring legacy code, generating unit tests, and explaining intricate algorithms. Their strength lies in high accuracy and versatility, which has led to widespread adoption for many daily development activities. The primary trade-off is cost, as high-volume usage can become expensive for large teams.

Anthropic’s Claude Models: Claude 3 Opus has carved out a niche with its exceptionally large context window. This makes it ideal for developers working with extensive codebases, monorepos, or dense technical documentation. Its ability to reason over long inputs makes it a top contender for deep code analysis and comprehensive documentation tasks.

Google’s Gemini Models: Gemini 1.5 Pro is a strong performer, particularly within the Google Cloud ecosystem. Developers often use it for workflows that blend coding with research, documentation, and cloud-native development. It offers a compelling balance of speed and accessibility, but organizations requiring deep customization or private, on-premises deployments may find it less flexible.

Open-Source and Self-Hosted Models: Models like Llama 3, StarCoder, and DeepSeek are rapidly gaining traction, especially in organizations with strict security requirements. These models offer maximum control over data and infrastructure. While they avoid per-seat and per-token fees, the operational effort for setup, maintenance, and fine-tuning can be significant, and license terms vary from model to model. They are best suited for teams with mature DevOps practices and a clear need for data sovereignty.

A Framework for Evaluation

Choosing an AI assistant requires moving beyond marketing claims and focusing on the factors that directly impact your workflow and business goals. The ideal model for a solo developer optimizing a personal project is fundamentally different from one required by a regulated enterprise.

Accuracy and Reliability: The primary criterion is output reliability under real-world constraints. Does the model generate code that is correct, secure, and maintainable? Evaluate this by testing models on real-world problems specific to your domain. A model that performs well on generic web development tasks may struggle with the nuances of embedded systems or financial algorithms.
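One way to make this kind of domain-specific testing concrete is a small harness that scores each model's output against your own test cases. The sketch below is illustrative only: the two candidate functions are hand-written stand-ins for code returned by two models, and the compound-interest test cases are hypothetical examples of a financial-domain check.

```python
from typing import Callable

# Hypothetical domain test cases: (principal, rate, years) -> expected balance.
TEST_CASES = [
    ((100.0, 0.05, 1), 105.0),
    ((200.0, 0.10, 2), 242.0),
    ((50.0, 0.0, 10), 50.0),
]


def pass_rate(candidate: Callable[..., float], cases, tol: float = 1e-6) -> float:
    """Return the fraction of cases the candidate passes.

    A raised exception counts as a failed case rather than aborting the run.
    """
    passed = 0
    for args, expected in cases:
        try:
            if abs(candidate(*args) - expected) <= tol:
                passed += 1
        except Exception:
            pass
    return passed / len(cases)


# Stand-ins for code produced by two models (assumed for illustration).
def model_a_compound(principal, rate, years):
    return principal * (1 + rate) ** years


def model_b_compound(principal, rate, years):
    # Plausible-looking but wrong: this is simple interest, not compound.
    return principal * (1 + rate * years)


scores = {
    "model_a": pass_rate(model_a_compound, TEST_CASES),
    "model_b": pass_rate(model_b_compound, TEST_CASES),
}
```

Here the second candidate passes the one-year and zero-rate cases and fails only the multi-year case, which is the kind of domain nuance a generic benchmark may not surface.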

Workflow Integration: Native integration into the IDE is critical. The tool should feel like a natural extension of the editor, not a separate application that requires context switching. Assistants that support multiple model backends let teams switch LLMs without disrupting their workflows.
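The idea of swappable backends can be sketched with a small interface. Everything here is hypothetical: `CompletionBackend`, `CloudBackend`, `LocalBackend`, and `Assistant` are illustrative names, and the backends return canned strings instead of calling any real model API.

```python
from typing import Protocol


class CompletionBackend(Protocol):
    """Minimal interface the editor integration depends on (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


class CloudBackend:
    """Stand-in for a hosted model; a real one would call a vendor API."""

    def complete(self, prompt: str) -> str:
        return f"[cloud] completion for: {prompt}"


class LocalBackend:
    """Stand-in for a self-hosted model running inside your network."""

    def complete(self, prompt: str) -> str:
        return f"[local] completion for: {prompt}"


class Assistant:
    """Editor-facing component that only knows about the interface."""

    def __init__(self, backend: CompletionBackend) -> None:
        self.backend = backend

    def suggest(self, code_context: str) -> str:
        return self.backend.complete(code_context)


assistant = Assistant(CloudBackend())
cloud_result = assistant.suggest("def parse(")

# Switching models is a one-line change; the editor-facing code is untouched.
assistant.backend = LocalBackend()
local_result = assistant.suggest("def parse(")
```

Because the assistant depends only on the interface, moving from a cloud model to a self-hosted one does not change how developers interact with the tool.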

Security and Data Privacy: For professional teams, how a model handles proprietary code is critical. Before adopting any tool, verify data retention policies, encryption standards, and options for local or private execution. A recent survey found that more than 60% of organizations rank data security as their top concern when evaluating AI tools. This has fueled the adoption of self-hosted models and enterprise-grade assistants that guarantee code and prompts are not used for training external models.

Total Cost of Ownership: Pricing extends beyond the per-token cost of an API call. For cloud models, budget for scaling usage across the team. For open-source solutions, factor in infrastructure, maintenance, and engineering time for setup and fine-tuning. A seemingly “free” model can become expensive once operational overhead is considered.
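A rough comparison can be worked through in a few lines. Every number below is a placeholder chosen for easy arithmetic, not a real vendor price or salary figure, and the two cost functions are a deliberately simplified model that you would extend with your own line items.

```python
def cloud_monthly_cost(developers: int, tokens_per_dev: int,
                       price_per_million_tokens: float) -> float:
    """Usage-based cost: scales linearly with team size and token volume."""
    return developers * tokens_per_dev / 1_000_000 * price_per_million_tokens


def self_hosted_monthly_cost(infrastructure: float, engineer_hours: float,
                             hourly_rate: float) -> float:
    """Fixed cost: hardware or cloud compute plus engineering time."""
    return infrastructure + engineer_hours * hourly_rate


# Placeholder inputs (assumptions for illustration only).
cloud = cloud_monthly_cost(
    developers=50, tokens_per_dev=20_000_000, price_per_million_tokens=10.0
)
self_hosted = self_hosted_monthly_cost(
    infrastructure=6_000.0, engineer_hours=60, hourly_rate=100.0
)

# Team size at which the two options cost the same under these assumptions.
cost_per_developer = cloud_monthly_cost(1, 20_000_000, 10.0)
break_even_developers = self_hosted / cost_per_developer
```

With these placeholder inputs, the cloud option comes to 10,000 per month and the self-hosted option to 12,000, so the model with no licensing fee is the more expensive choice until the team grows past 60 developers.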

Enterprise Readiness: From Productivity Tool to Governed Platform

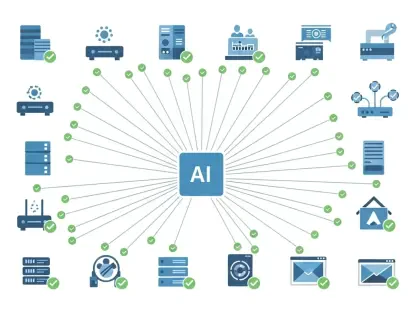

For an AI coding tool to be viable at the enterprise level, it must adhere to strict security and compliance standards. Key capabilities for enterprise adoption include:

Deployment Flexibility: The ability to run the model in the cloud, a virtual private cloud, or fully on-premises to meet data residency and compliance needs.

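Role-Based Access Control: Centralized identity management and role assignments ensure that only authorized users can access the tool and that each user sees only the features and repositories their role permits.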

Audit and Traceability: Comprehensive logs support compliance audits for standards like SOC 2 or ISO 27001.

Policy Enforcement: Automated guardrails enforce coding standards, block deprecated libraries, and keep sensitive data out of prompts.
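As a rough illustration of the policy enforcement item above, the sketch below checks a prompt for a few secret-like patterns and checks generated Python code for imports from a blocklist. The pattern set and the `BLOCKED_MODULES` list are assumptions made up for this example; a production guardrail would use a maintained secret scanner and your organization's own policy list.

```python
import ast
import re

# Illustrative patterns only; real secret detection needs far broader coverage.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "inline_password": re.compile(r"(?i)\bpassword\s*[=:]\s*\S+"),
}

# Hypothetical policy list of modules the organization no longer allows.
BLOCKED_MODULES = {"imp", "optparse"}


def prompt_violations(prompt: str) -> list[str]:
    """Return the names of secret patterns found in an outgoing prompt."""
    return [name for name, pattern in SECRET_PATTERNS.items()
            if pattern.search(prompt)]


def blocked_imports(source: str) -> set[str]:
    """Return blocked top-level modules imported by generated Python code.

    The code is parsed with ast, never executed.
    """
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            found.add(node.module.split(".")[0])
    return found & BLOCKED_MODULES


def allow_prompt(prompt: str) -> bool:
    """Gate a prompt before it leaves the developer's machine."""
    return not prompt_violations(prompt)
```

A gateway or IDE plugin could call `allow_prompt` before sending a request and `blocked_imports` before showing a suggestion, logging each rejection for later audit.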

Security incidents related to misconfigured AI tools are rising, making AI governance a board-level concern.

Conclusion

AI coding tools have moved beyond autocomplete. The meaningful differentiators now are context depth, enterprise governance, and workflow integration. Teams that succeed with AI are not chasing features. They are solving for security, measuring real productivity gains, and aligning tools with existing architecture.

The gap between individual productivity and enterprise viability remains wide. As adoption scales, the tools that address compliance, auditability, and context management will pull ahead of those optimized purely for code generation. The question is no longer whether to adopt AI coding tools, but which trade-offs your organization is prepared to make.